Welcome to the kriha.org weblog

- First Security Day at HDM - from platforms to applications


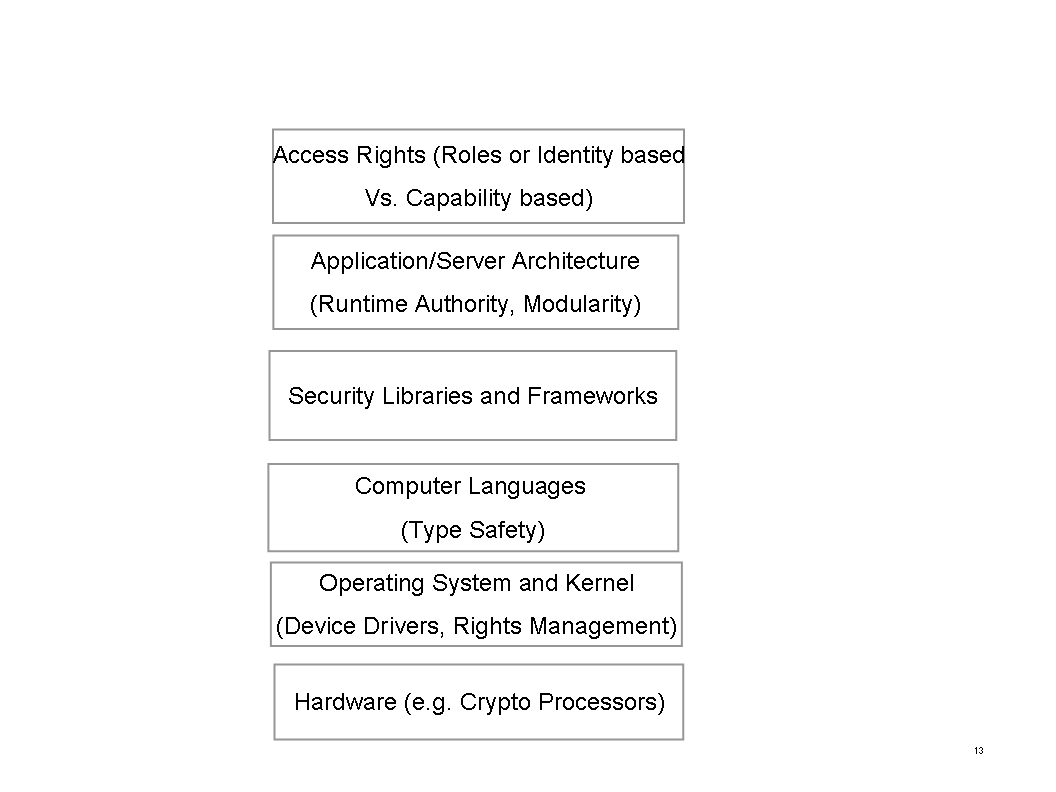

The Security Day will cover roughly four areas of security: platforms (which become increasingly important as we get the internet threat models under control), infrastructure (which shows limits, e.g. firewalls), applications (currently the hottest topic of security research and threats) and last but not least crypto-related research.

HDM members as well as external specialists will demonstrate the latest in security research and development. How do you apply electronic signatures in real-life applications? How do you run a host-based IDS and what will you gain by doing so? What are the consequences for maintenance and application development? What's new in Windows Vista with respect to security? How do mobile DRM systems work? How do you run the security of a large provider?

There should be something for everybody interested in security and there will be time for discussions on current threats, developments and countermeasures. Viewers on the internet can use a live chat channel to raise their questions.

This is the first security-related event at HDM, organized by the CS&M faculty. A huge number of speakers were interested in participating and we could only select a few this time. So you can expect another Security Day in the near future.

Note

You will find the program for the Security Day on the HDM homepage. We will start at 9.00 on the 12th of January. As usual, the event will be streamed to the internet.

- The problem of many speakers...


Events with a large number of guests currently suffer from our inability to capture the voices of speakers other than the main speaker(s) in front of the audience. Right now I am running around with a microphone, and when somebody just starts to talk (as in a lively discussion) we need to yell "stop" and the speaker has to wait for the microphone to be brought to him.

I would love to see a solution for this problem because it is both tiring and kills every discussion just for the benefit of our internet viewers. Our audience would not need a microphone in most cases as the rooms are not that big. But the internet viewers do not hear the questions if we do not keep strict microphone discipline.

Unfortunately there seems to be no good and affordable solution to this problem. The solution should also be mobile btw. as we won't be in the same room with every event.

Video seems to be so much easier than audio - just point the camera at somebody. But how do we capture audio? I have seen long sticks with microphones attached. Really ugly, because it threatens the participants who are sitting under those sticks. Permanent installations are too expensive and we would need them in several rooms.

The following ideas try to solve this problem. The first one is based on balloons floating at the ceiling of a room. The microphone is attached to an extensible string that hangs down from the balloon. A speaker can grab the cord and pull the microphone to him.

There need to be several of those balloon microphones in a larger room so that all speakers have a chance to talk. Each microphone should be able to work like a phone-conference microphone (which means it should serve around 4 people in one position concurrently). Around 6-10 people should have easy access to the microphone dangling from the ceiling.

Another idea is a throwable microphone. It has the same group conference abilities as described above but can be thrown around (better: handed off easily and quickly to other speakers). Typically discussions evolve between groups and throwable microphones would reach those groups quickly.

An important aspect of both solutions is how the sound engineer can identify which microphone is in use. The throwable microphone would have a button that needs to be pressed while speaking. This will automatically suppress all others and signal the working microphone to the sound engineer. The balloon solution could do the signaling via coloured lights.

Anyway - if you know a better solution for this problem, please let me know. But before I forget: the requirements are as follows:

  - Affordable on a university budget (which means in our days: dirt-cheap)
  - Mobile - we need to use it in different rooms and cannot go for a permanent installation
  - Superior usability - this goes with mobile and the fact that students and myself need to run it after a few minutes of introduction
  - Easy to build and store
  - Does not require personnel (as the stick approach e.g. does)

- Things learned on Games Day


Our so-called "days" at HDM are supposed to bring new ideas and developments into our faculty. The second Games Day was no different in this respect. But this time new trends and developments became very visible - like the need for distributed development - and the last presentation might even push the faculty into new ways to organize learning in projects: cross-faculty, cross-university teams from different areas working on one goal. But let's take it step by step.

If there was any doubt about how professional games development has become, Louis Natanson from our partner university at Abertay Dundee was able to destroy it quickly. He showed the structure and organization of a BSc and master program of game development. It is clearly based on a very good knowledge of general computer science topics. On top of that students learn (mostly in groups) the key factors of modern games development: concept art, business functions and last but not least how to create large games.

And there is more: students all over the world can compete in Abertay games competitions. (see: Daretobedigital) Louis is also trying to get a collaboration between universities going that would allow students to get the finishing touch of game development at Abertay while laying the foundation at their home universities - definitely something we will look into here at HDM.

Another interesting thing is the certifications students of games development can get in the UK. It looks like the games industry and universities like Abertay really have established a core concept of what it takes to be a games developer.

Like the computer science and media department at HDM, the games department at Abertay strongly believes in team-based projects and a strong foundation in computer science.

This is another lesson to be learned from our Games Day and it was delivered by Stefan Baier of Streamline Software in Amsterdam: the future of multi-media productions already IS very much a distributed process - both with respect to programming and content. Stefan Baier gave a very interesting talk on the demands of those development teams: they consist of a large number of specialists in different areas and work together from different locations. The production process seems to be closer to movie productions, and IT project management may not be the right methodology to cover the whole process.

Artists and technical people need to form ad-hoc virtual organizations. They need to communicate frequently and openly. Tool standards are important but not a reality nowadays. The degree of outsourcing is mind-boggling: one company renders buildings while the other renders the roads and traffic signs for the same frame.

Distributed content production does not stop there. Different content from different media is now integrated into games via wikis, homepages etc. and becomes part of the game.

How can we prepare our students for this type of environment? Perhaps the answer will come from the last presentation of the day - our own games project at HDM (see below).

Stefan Baier mentioned that consumers have ever increasing expectations of games, especially the 3D graphics. Some game companies are getting scared and fear that they cannot meet those expectations in the future. But help might come from the hardware side: Michael Engler of IBM Böblingen demonstrated the powerful features of the new Cell chip - which e.g. powers the new Sony Playstation 3. It is a mean machine: a general purpose RISC core (PowerPC) and 8 vector units which achieve around 200 GFLOPS.

Michael Engler demonstrated realtime ray-tracing, realtime CT slicing etc. - but the core question is: how difficult is it to adjust to this new hardware? It looks like it won't be very difficult because many standard interfaces (OpenGL e.g.) have been ported to the platform. The new SDK2.0 has just been released and if we are lucky we will have a project for the Cell chip beginning next term at CS&M.

Then the next "aha" effects were just around the corner: Jan Hofmann and Oliver Szczypula, professional sound designers, have just finished their studies of audio-visual media at HDM and they gave us an introduction into sound and music development for games. They showed lots of examples of sound icons, atmo music etc. from their own production "Ankh" (which received lots of prizes and honours) and other current games. The level of quality achieved is amazing and they showed how hardware evolved to deliver this quality in realtime.

Before the talk, audio and sound in games were not really a concern for me (I am not even sure whether I distinguished properly between them). I learned a lot from this talk. The most important piece is that audio is the major factor not only for emotions in games but also for steering players through levels, transporting game events, making players aware of things in the game etc. Audio and sound for games are completely different from regular productions - even though e.g. the audio parts are more and more created by professional orchestras, as in the case of Gothic3. Audio and sound in games need to follow the camera position and therefore need to be dynamic. On top of this problem, audio and sound can start fighting with each other. In those cases the speakers may become hard to understand. This means audio and sound need to be carefully aligned with game flow - stop when big things happen or carry players across changing scenes. Audio and sound are core game elements and the existence of audio engines or sound engines is therefore simply a necessity. Unfortunately many gamers and players just think about the 3D game engines and forget about the audio.

Another interesting result of their talk was the idea to involve the games company both are currently working for in our third Games Day in the summer term. I will be looking forward to some interesting demos.

After a lot of technology and games economy stuff we took a dive into the social consequences of gaming. Petra Reinhard-Hauck showed positive aspects of games (better motion control, social experiences, computer know-how) as a result of current studies in media. The numbers Dr. Reinhard-Hauck showed were very interesting as they also showed a big difference between girls and boys in the way the computer is used. Is the reason for the low numbers of women in IT somewhere hidden behind those numbers? Looks like the next Games Day should have a talk on "women in games"...

Dr. Reinhard-Hauck caused quite a number of lively discussions between our guests and students on the effects of games. A number of students emphasized negative aspects as well, like the loss of social skills and the dangers of getting lost in the virtual world. Most attendees agreed though on the statement that games alone cannot be blamed for school shootings. The fact that the Stuttgarter Zeitung sent a journalist to our event and ran an article on Dr. Reinhard-Hauck in today's SZ just demonstrates the social and political importance of computer games.

The last presentation of the day was on our own large-scale games project "the city of Noah". Started by Thomas Fuchsmann and Stefan Radicke (who also got us in touch with the previous speaker Dr. Reinhard-Hauck - many thanks!) the project has now attracted specialists from different areas: story design, sound, music, programming and last but not least project management. 30 students from HDM and University of Stuttgart are working together on this ongoing project.

Besides all the artistic and technical elements of this project there is one point that seems to stick out because of its importance for the project overall: project management. The students reported that the skills learnt in IT-Project Management (taught by my friend Mathias Hinkelmann) were an invaluable asset for this type of project. The high degree of parallel development (programming, concept art, music) is certainly a problem because all these parts somehow depend on each other. In this case it meant that the musicians have to imagine most of the game flow and create sound and music in advance.

A very interesting fact that the students pointed out was that the overall management of such a project seems to require the skills of movie directors - something Stefan Baier had mentioned before in his talk as well. IT is only a part of such a project and perhaps not even the most important one. Much to the surprise of Louis Natanson the students did not have any problems between the "artists" and the "programmers" in this project. Many projects are plagued by differences between the creative and the constructive (;-) groups. There is perhaps another lesson to learn behind this fact: does open (source) organization prevent some of the typical fights?

Given the current problems of organizing such a big project the next idea seems to be rather crazy: the next goal could be to start distributed games development as a grass-roots effort between universities, because you can only learn distributed development in a truly distributed environment. We would have to acknowledge the cost of communication overhead in those types of environments - but that is just what needs to be learned.

At HDM we have to do some homework now: how can we support such cross-faculty teams better? Students of all faculties should have the option to participate in such projects and get their respective ECTS points for this. How does faculty staff interface with those projects? How can we help and guide while still letting the students decide?

The games project has the potential of changing the way we study and work at HDM. It could change this toward even more collaboration between students and staff and between students - beginning with the first term. The future definitely is collaborative and an important part of this is the language to understand each other. In most cases nowadays this will be English - whether you like it or not. And I was quite pleased that the first three talks were in English because it demonstrated what skill one needs to have to be successful in our business. Which leads to the next question: how do we increase language skills with our students?

- In the name of security - or how your past will haunt you


My friend Sam Anderegg recently pointed me to an interview with a representative of a security company specializing in "background" checks on personnel. The case in question was a security incident that happened at Paine-Webber a couple of years ago. A disgruntled sys-admin quit but not before placing some malware on more than 2000 server machines. Paine-Webber had a hard time fixing their machines and guaranteeing that the correct software releases were installed.

The representative from the security company claimed that a simple "background check" would have revealed so much information on the employee that he would have never become a sys-admin with root privileges within Paine-Webber. He mentioned a drug offense in the SIXTIES!! as one of the facts that could be found easily within 24 hours using public information from the web.

But the representative went way beyond that. He said that not only criminal records should be looked at. Private credit information, educational records etc. should also be evaluated by companies. And not only once but repeatedly because employees might get promoted.

This story has some well known ingredients: an abundance of private information that is not protected from abuse. Private theories on what makes a person unreliable (how old can an offense be to still be of value? What are "critical" offenses?). A security company trying to make money by distributing fear (what, you don't do "background" checks in your company?) and so on.

In the name of security there seems to be no stopping companies from violating even basic human rights. Last night I read that the CIA tapped Princess Diana's phone in the hotel the night she died. Is there still anybody around to question the practices of those secret services? Or does the (remote) threat of terrorism justify anything?

Discussion on Slashdot: Slashdot

But there is also a technical part to the story: how could one person control ALL the IT infrastructure of the company? Banks use clever mechanisms in case one person alone cannot be completely trusted: the famous four-eye-principle e.g. or the use of several keys for a safe. Why aren't those mechanisms used in IT? As long as we use the concept of a global admin we will inevitably run the danger of disgruntled employees causing havoc with our systems. And no amount of screening will prevent this as we cannot predict a human being's actions.

This means that our systems need to apply the principle of least authority (POLA) on all layers. Once the damage one person can do is reduced, the social and political dangers of the screening process described above can be easily avoided. Right now - based on our vulnerable systems - the screening gives a false sense of security and is a danger for our society at the same time. Time to put pressure on our platform vendors.
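To make the four-eye idea concrete for IT operations, here is a minimal sketch. The class `PrivilegedAction` and its methods are hypothetical names made up for illustration, not an existing product or API; the only point is that the requester alone can never trigger the action.

```python
from dataclasses import dataclass, field


@dataclass
class PrivilegedAction:
    """Hypothetical high-impact operation guarded by a four-eye rule."""
    description: str
    requested_by: str
    approvals: set = field(default_factory=set)

    def approve(self, admin: str) -> None:
        # The requester can never approve his or her own request.
        if admin == self.requested_by:
            raise PermissionError("requester cannot approve own action")
        self.approvals.add(admin)

    def can_execute(self) -> bool:
        # Requester plus at least one other admin: four eyes.
        return len(self.approvals) >= 1
```

A single admin with root would fail `can_execute()` until a second person signs off, which limits the damage one disgruntled employee can do without any background screening.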

- 2. Games Day at HDM - Technology, Arts and Economics of Computer Games


Politics and media seem to have found the final culprit behind troubled students: computer games. Of course it is easier to blame a couple of games for shootings at schools than to fix the many problems that plague our schools nowadays. Lack of money, social problems of the new "Unterschicht" which has been carefully created in the years of Kohl and Schröder and so on.

The second Games Day offers an enticing mix of talks on various aspects of games development, including positive aspects of computer games, Cell chip internals, game economics and sound in games. Louis Natanson from Abertay University will present their studies on games and creativity. The program is designed for a larger audience from different faculties, the industry or even game fans.

Note

You will find the program for the Games Day on the HDM homepage. We will start at 9.00 on the 15th of December.

- WEB2.0 at HDM - get ready for your dose of tag soup


The event this coming Friday is very special for several reasons: it has an excellent list of speakers ranging from social sciences to hard-core technology. But that is not all. This event has both external specialists (e.g. STSMs from IBM, Adobe specialists etc.) and some very good internal members of CS&M who will demonstrate the level of knowledge available at HDM. And last but not least the event is a WEB2.0 event: it has been planned collaboratively with students, and collaborative WEB2.0 tools have been used extensively (writeboard, project management).

When I look at the program and how it got created it is absolutely clear to me that our approach of organizing such events together with our students is the right approach. It keeps me from becoming a bottleneck due to a lack of time and it teaches event organization effectively - something our students will need when they work in technical areas later. The approach got started with our first Games Day last semester and it looks like it can continue for a long time.

Note

You will find the program for the Web2.0 day on the HDM homepage. We will start at 8.30 on the 1st of December.

- Beyond SOA - event-driven architectures


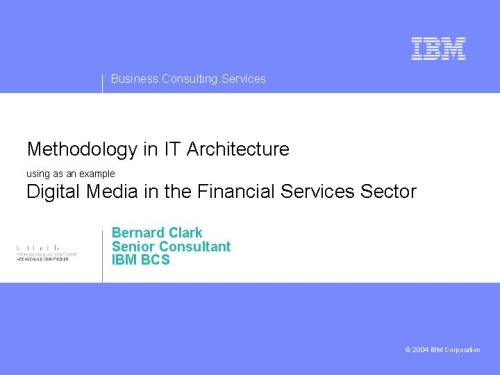

Our SOA seminar with IBM had lots of good talks on enterprise architectures and problems. The knowledge needed to work within the bounds of a large, international enterprise is staggering but where do you learn the terminology and technology needed? From past experience I'd say there is a lot of learning by doing involved. But how do you prepare in a systematic way?

There is some good literature available and I'd like to discuss two very special books on the topic of enterprise software. The first one is the seminal work by Martin Fowler (who I met years ago in Basle), "Patterns of Enterprise Application Architecture". Fowler knows business patterns very well and this book will take you through all the tiers of enterprise software. It will teach you how to organize GUIs, business logic and data storage. It has a lot of information on how to structure information and objects to create fast and flexible applications.

The second book is quite the opposite with respect to structure: it has its focus on interaction patterns. "Enterprise Integration Patterns" is a treasure chest for people in need of integration know-how. Gregor Hohpe and his colleague take you through all the technologies needed to decouple applications and create maintainable but secure and reliable software based on messages. The icons shown at the IBM SOA seminar reminded me of Hohpe's book and I am glad I had a look at the Enterprise Integration Patterns homepage because I found a wealth of very good articles on event-driven architectures. Hohpe's paper on EDA is one of the best introductions to this topic that I have seen yet. It discusses the following topics:

  - Call stack architectures and the hidden assumptions behind them (speed, sequential processing, coupling between caller/callee), with coordination, continuation and context as the core principles of call stack architectures
  - Assumptions as the major tool to analyze de-coupling (do you know what creates coupling between components?)
  - The different way state is handled in EDA systems: participants store events they think they will need later. This creates replicated state automatically. I loved his example of an order received: the posting of this event creates a number of related postings, e.g. the shipping address. The shipping service will collect those postings and use them later.
  - Speaking of events: the abstraction level of event-driven architectures can be higher than that of SOA systems. In SOA services are explicitly called. Callers may not need to know the components behind the service (e.g. checkCreditCard) but they need to call that service from within their code. A simple posting on a higher abstraction level (e.g. "Order received") avoids this tight coupling. Take a look at the diagram of call abstractions ranging from calling a component over calling a service to just posting an event.
  - The power of asynchronous systems shows in many of Hohpe's examples (e.g. plugging tracing and monitoring components into an enterprise bus, or replaying events in case of compensating actions).

But his Starbucks example is hilarious. By a happy coincidence I use the "Sutter-begg" in Basle SBB on a regular basis and it works almost like Starbucks: once you arrive you need to yell your choice of coffee to the cashier who in turn yells it to the baristas working in the back. But unlike Starbucks the cashier seems to remember who ordered what and in most cases you are correlated with your choice of coffee properly. When you get your coffee you will pay - not before. This is good because only once you get the coffee will you need access to the (scarce) resource tablespace (no, not Oracle's, Sutter-begg's tables) to prepare it (sugar etc.). And then you are right in front of the cashier and might as well pay.

This example shows different strategies to handle the orders (half-sync/half-async) and different failure strategies (throw the coffee away if it is delivered wrongly - it's not expensive to use this strategy but it speeds things up a lot compared to a two-phase-commit protocol). So take a look at the article "Starbucks does not use two-phase-commit".
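The coffee shop flow can be sketched in a few lines. This is only my own toy model of the idea, not code from Hohpe's article: the class `CoffeeShop`, its fields and the `brew` parameter are hypothetical. Orders are taken and queued without waiting for the drink, an order id correlates drink and customer, and a wrong drink is simply thrown away and redone instead of being prevented by a transaction protocol.

```python
from collections import deque


class CoffeeShop:
    """Toy model: asynchronous order intake, correlation, write-off on error."""

    def __init__(self):
        self.queue = deque()   # orders waiting for the barista
        self.pending = {}      # order id -> customer (the correlation)
        self.next_id = 1
        self.discarded = 0     # drinks thrown away

    def take_order(self, customer, drink):
        # The cashier only records the order and moves on to the next guest.
        order_id = self.next_id
        self.next_id += 1
        self.pending[order_id] = customer
        self.queue.append((order_id, drink))
        return order_id

    def brew_next(self, brew=lambda drink: drink):
        # The barista works through the queue independently of the cashier.
        order_id, drink = self.queue.popleft()
        made = brew(drink)
        if made != drink:
            # Failure strategy: throw it away and queue the order again.
            self.discarded += 1
            self.queue.append((order_id, drink))
            return None
        return self.pending.pop(order_id), made
```

The retry costs one cup of coffee, but neither cashier nor barista ever has to block on the other.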

Hohpe is not just painting a pretty picture: there are still subtle dependencies between communication partners in an EDA system and somebody will need to keep track of message formats and semantics, and of subscriptions and postings, e.g. to avoid registering for queues and topics which do not exist in the system. Another nice case where abstraction and information hiding (and de-coupling is a rather strong way of information hiding) need to be balanced for the reasons of system management and security.

Speaking of which - I am currently pondering mixing object capabilities with event-driven architectures. It seems to be the case that we absolutely need the concept of information besides capabilities - as it is e.g. modeled in the theoretical approaches of Fred Spiessens et al. The capability will control the channels and topics available to subjects.
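A small sketch may help to show both sides: the de-coupling through postings and the bookkeeping that is still needed. Everything here is an assumption for illustration (the `EventBus` and `ShippingService` classes, the topic names, the event fields), and the dispatch is synchronous and in-process to keep it short, whereas a real enterprise bus would deliver asynchronously.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process bus with a registry of known topics."""

    def __init__(self, topics):
        self.topics = set(topics)
        self.subscribers = defaultdict(list)

    def _check(self, topic):
        # Somebody has to keep track of which topics exist at all.
        if topic not in self.topics:
            raise KeyError(f"unknown topic: {topic}")

    def subscribe(self, topic, handler):
        self._check(topic)
        self.subscribers[topic].append(handler)

    def post(self, topic, event):
        self._check(topic)
        for handler in self.subscribers[topic]:
            handler(event)


class ShippingService:
    """Collects postings it will need later (replicated state)."""

    def __init__(self, bus):
        self.addresses = {}   # local copy, filled from events
        self.shipped = []
        bus.subscribe("shipping_address", self.on_address)
        bus.subscribe("order_packed", self.on_packed)

    def on_address(self, event):
        self.addresses[event["order_id"]] = event["address"]

    def on_packed(self, event):
        # No call back to the order system: the address is already here.
        order_id = event["order_id"]
        self.shipped.append((order_id, self.addresses[order_id]))
```

The poster of "shipping_address" never calls the shipping service, yet a typo in a topic name is caught at subscription time instead of silently going nowhere.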

- XLINK or AJAX?


I have not been following the XML-DEV list or XML development in general for a long time so please forgive me if I am beating a dead horse here...

Working on a book on software security I took a look at some Ajax technologies and their security implications. There are many different ways to contact servers and embed content into pages (XMLHttpRequest, dynamically added script tags etc.). And I was wondering: lots of code - and no tags?

That reminded me of XLink - the one standard that surprised me completely by taking a long time to get finished and then disappearing (or did I miss something here?).

I was a big fan of HyTime and its powerful addressing and linking expressions. Looks like we got the addressing part (XPath) but where is the linking? I guess the linking and embedding is now done with AJAX - in other words with code instead of in a descriptive way.

I am not saying that all things in Web 2.0 could be done descriptively. But I am disappointed that it is not used at all (am I wrong here?)

Some ideas about the reasons: Did XLink in browsers raise too many security issues (cross-domain, same origin etc.)? If so, I don't see anything different if this is done in code instead of tags.

Tags would allow us to express the intentions more clearly and the implementations in agents are probably more stable than individual script code. (testability etc.). And there is the social side as well: many who publish today on the web learned it from looking at HTML source of other sites and copying the tags. But the skills to master large code bases in Javascript are not available to everybody.

Don't get me wrong: I sure like what can be done in Web2.0 today. But I am not so sure about the way it is done with code. To me it looks a bit like we have given up on the idea of information aggregation through tags. But if descriptive HyTime was too complex - doesn't the code have the same complexity just hidden somewhere?

If somebody could point me to some discussions on this topic I'd really appreciate it.

- Latest Awards for CS&M Students at HDM


Ron Kutschke - co-author of tradingservice - has received an award as the best graduate at HDM in 2006. His thesis was advised by Stephan Rupp (now Kontron) and myself. Matthias Feilhauer has won the Carl Duisberg Gesellschaft (CDG) prize for his thesis on electronic waste exports in developing countries (mentored by my friend Prof. Rafael Capurro).

- Web2.0 security and Google Maps, how does it work?


Google Maps is clearly a Web2.0 application which is loved by many. And many site owners integrate their content with Google Maps. Look at this example from sk8mag.de. But it also raises a couple of questions on the security behind Web2.0. A core element of AJAX or Web2.0 is the XMLHttpRequest - an asynchronous way to contact a server and get data for the current DOM, without the need to re-load the page. Following the "same origin principle" XMLHttpRequest is restricted by browsers to only call back to the server where the page came from.
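As a rough illustration of what "same origin" means, the following sketch compares scheme, host and port of two URLs. It is a simplification written for this post, not the actual browser logic; the helper names `origin` and `same_origin` and the example URLs in the usage are made up.

```python
from urllib.parse import urlsplit

# Default ports, so that http://host/ and http://host:80/ compare equal.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url):
    """Return the (scheme, host, port) triple of a URL."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port or DEFAULT_PORTS.get(scheme)
    return (scheme, (parts.hostname or "").lower(), port)


def same_origin(page_url, request_url):
    """True if a page at page_url may call back to request_url."""
    return origin(page_url) == origin(request_url)
```

Under this rule a page loaded from one site could call back to its own server, but a request from that page to a map provider on another host would be refused.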

But this would not allow sk8mag to integrate the maps. So Google must do something else. Now there is a thing called dynamic script tags. In connection with JSON (JavaScript Object Notation - a way to serialize JavaScript objects) there is supposedly a way to contact ANY server from within a page and get data. But isn't this dangerous?

The two most common risks in such a case are that credentials (e.g. cookies) of the current page are exposed, and that a browser could be used as a bridge between intranet and internet. Let's say a user opens a browser window on the Internet and downloads Javascript with XMLHttpRequest code which then goes to a URL in the intranet and extracts data. Authentication requirements for the intranet would not help because the user is already logged in when SSO is used (the dangers behind automatic and transparent sign-in are a topic for usable security as well).

To make a long talk short: is there anybody out there who can explain to me how Google Maps works in the context of Web2.0 technologies? Any help would be seriously appreciated as I still have a lot of other things to do for our security book.....
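To show what the dynamic script tag trick looks like in general (this is NOT a description of how Google Maps actually works, which I do not know), here is a sketch of both halves. The function names, the `callback` query parameter and the URL are assumptions for illustration: the page adds a script tag pointing to a foreign server, and that server answers with JSON wrapped in a call to a function the page has defined.

```python
import json
import re

# Accept only plain identifiers as callback names (an illustrative rule).
_CALLBACK = re.compile(r"[A-Za-z_$][A-Za-z0-9_$]*")


def script_tag(service_url, callback):
    """What the page inserts into its DOM to reach a foreign server."""
    return f'<script src="{service_url}?callback={callback}"></script>'


def wrapped_response(callback, data):
    """What the foreign server sends back: JSON wrapped in a function call."""
    if not _CALLBACK.fullmatch(callback):
        # Otherwise the caller could inject arbitrary script into the reply.
        raise ValueError("invalid callback name")
    return f"{callback}({json.dumps(data)});"
```

Because the browser loads and runs the response as script, the same origin restriction on XMLHttpRequest does not apply, and the page has to trust the foreign server completely.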

To me it looks like we need to redefine browser security completely in the context of Web2.0 techniques. And do security inspections differently since now it is possible to change javascript code AFTER page load. I have collected a number of interesting links about AJAX security:

The whole topic seems to be a mixture of usability (seamless integration of different sites) and security (credentials, authority etc.) and while users might love the aggregation power of AJAX it needs a re-thinking of browser security in general. It can't be that servers completely take over the responsibility for the security of clients...

- Lyin' eyes: how they take our freedom away, more...


The cat jumps out of the bag: data from the German toll system are going to be used by "law enforcement" (police, bnd, mad, cia and so on). NOOOOOOOOOO! Toll data will never be available to "law enforcement" - that was the promise when the toll system was introduced. The data were protected by law - and laws can change (look at the job card design - it suffers from the same weakness). The whole approach is called the domino system: step by step, piece by piece data are first collected and then (ab)used. First only in "severe cases of criminality", then always. And of course, "law enforcement" is quick in finding reasons for data access and how much better they could protect us if they only could.... This has always been the case and the public laws were made to protect us FROM "law enforcement". Schneier is right: whenever data are collected they will be used for other purposes as well. That is WHY THE LAW DOES NOT ALLOW DATA COLLECTION AND POLICE ACTIVITY WITHOUT DUE CAUSE! This was one of the most important principles put in place when states changed from monarchies and tyrannies to democracies!

Now the political caste wants to do away with this principle. Beckstein is the best as always (closely followed by the always agreeing SPD politicians who seem to change their position without any problems. Remember: this is supposedly the same party that had an Otto Wels take a stand against Hitler in the Reichstag and whose members suffered badly from Gestapo and police in the Third Reich.) Beckstein argues that telephone surveillance is legal too. Yes, but at least today "law enforcement" still needs a due cause and in most cases even the approval of a judge to do so. And people are entitled to know about the surveillance at a later point in time.

But perhaps Beckstein simply thinks ahead: the EU wants to collect data WITHOUT cause for three years: telephone, internet, you name it. So one could say that using toll data for "law enforcement" is just to make us compliant with future EU laws (;-). I wonder why they don't use this argument yet.

Did I mention that Beckstein also wants a toll system for cars? Yes, but ONLY with vignettes - for now....

There is a massive shift underway to allow "law enforcement" general activities without due cause - something that has been reserved for secret services till now and carefully protected as it is a dangerous right. The Nazis introduced the famous "Blockwart" system: Every block/street had a person assigned to watch and spy over the inhabitants of this block. This spying happened without due cause, every day. The Gestapo used the data collected for their purposes. I wonder when Beckstein is going to propose a "democratic" version of the Blockwart system.

Armin Jäger CDU said: it has always been a principle of the CDU to put security and law-enforcement above data protection. Don't they ever learn a thing from history?

- Modeling of operational aspects and how to get wheel chairs mobile

Those were two recent thesis works I'd like to present here. Mirko Bley developed a domain specific language to cover operational aspects (deployment, runtime) in the large PAI infrastructure project at DaimlerChrysler. He shows the modeling problems behind a DSL, how a DSL is used to generate artifacts using Eclipse tools, and how constraints are currently handled. The thesis gave me a lot to think about, e.g. whether we could use the same approach to model security aspects and how we could use the large infrastructure project in the systems management and generative computing classes of our computer science master. The title of the thesis is "Modeling of operational aspects in System Infrastructures" and it is an excellent way to learn about DSL development and associated tools. BTW: Mirko decided not to use UML as it does not currently cover operational aspects. SysML seems to be much more geared toward system architecture and design and not so much toward operating systems.

Verena Schlegel has been working on usability problems for quite a while now. In the past she and her colleagues won prizes for re-engineering large applications for usability. For her thesis she decided to work at Fraunhofer IAO in the ambient intelligence project. Her job was to develop and test a user centered design for wheel chair users and their mobility problems. She designed a site which aggregated information important for wheel chair users (like transportation, toilets, information etc.) and tested it empirically with specially created test materials. Tons of ideas about this as well: how to integrate web 2.0 technologies here? How to model and test user conceptual models? Every picture, term and process in this design had to be tested for usability. Complex planning processes, e.g. planning a larger trip, are a special problem. We don't want to overwhelm the users or let them drown in steps and towers of information. The techniques Verena Schlegel used to reduce the complexity of planning processes should be studied by every student working in process modelling.

- On war, criminals and talking straight

A good friend and colleague of mine - a very gentle person - took his family to Lebanon two days before Israel started the war against the Lebanese nation. He and his wife are from Lebanon and every year they go back to see their parents, who live in the southern part of Lebanon. He is back now - after spending two days hiding from bombs he took his wife and three year old daughter on a dangerous trip to Damascus and left as soon as possible. No chance to see his parents, who are now living in cellars. And now Israel has announced that the people in southern Lebanon have to leave: "leave now or get killed - it is your choice".

I talked to my friend yesterday and got a real-life account of what is going on in Lebanon right now. Not what Google News is telling us currently, but I will get to this later. My friend told me that this is an outright war against the Lebanese people. The Israelis do not distinguish between civilians and Hizbullah or army. The death count among civilians is rising every day and already exceeds 400 (including UN personnel, who are attacked nevertheless). And this is before the ground war has really started. (Even though I read in German media that soldiers from Israel have already been "working" (yes! the media called it working) in Lebanon for days.)

The infrastructure in Lebanon - built over many years after the last devastating war - is being purposefully destroyed by Israel. Wells, bridges, everything. A whole generation of young Lebanese will have to work solely to rebuild what is destroyed now. This is NOT a military attack. This is the same war crime as the allies committed against Hamburg, Dresden and other places in World War II.

US backed Israel seems to have lost all reason. No - the actions are very much planned and make a lot of sense given the right perspective: It is unknown what will happen in the US elections in fall. Perhaps the current elite of oil/military/religious leaders will lose the election. Better to hit now.

The strategy: it is well known and it has two components. The first component was executed successfully during the last couple of months: It is called destabilization. Make a few spectacular concessions but at the same time build new settlements on foreign land and declare that you will never get out of other illegal settlements. Allow an election but when you don't like the outcome just throttle the Palestinian money supplies. Ever seen a government run a country successfully without money? And this on top of the permanent humiliation that Palestinians receive through Israeli forces. Klaus Theweleit ("der Knall") describes such strategies of de-stabilization used by western politicians in the war in the Balkans.

Another excellent work on how to start a war is Manfred Messerschmidt's study on how the US entered WWII in the Pacific area. We all know Pearl Harbor - but who talks about the US cutting the Japanese off from their oil supplies first? Can you survive without OIL as an industrialized nation?

The second component is to claim self-defense as the reason for attack. This has been executed beautifully by Hitler ("Vorwärtsverteidigung"), Bush, Israel and many others in the past. Take some minor event (carefully nurtured during the first phase of de-stabilization) and blow it completely out of proportion. Did you ever compare the number of people killed in the so called 9/11 event with the current death toll in Iraq? Two kidnapped soldiers against hundreds of dead civilians? The number of Israelis killed vs. the number of Palestinians? This will at least show you who REALLY is in power.

Strategies are based on long-term socio-economic developments - a way of thinking that the German historian Hans-Ulrich Wehler has made very popular in German historical science. One of those developments is the lack of an enemy after the eastern bloc broke down. This is not socio-economic? Yes it is: it directly translated into a spending problem for the military and industrial elites which currently rule the US. And of course the same goes for the European military and defense industry. Luckily a new enemy could be built within the last 20 years: terrorism. Remember - it always takes two to tango. Terrorism is easily created by simply suppressing others to the point where they are helpless and have no other option left. Just what Israel does in Palestine.

The other big development is the fight for oil. China needs it desperately to sustain its growth and the US can throttle China through access to oil. And here we see the bigger interests behind the war on Lebanon: it is a way to carry war into Syria and Iran - just do some air raids into their territory and wait for the results.

Another long term development is of course the occupation of Palestine after WWII. Started as a terrorist operation (at one time Israel wanted to kill Adenauer, as has been recently uncovered) it is now calling everybody else terrorists. Three years ago I talked to a Jewish priest on a train ride (strangely he was also a Catholic priest!). He gave me the Israeli view on ownership and rights in the Middle East: TWO THOUSAND YEARS AGO this area was Jewish and that's why Israel owns it now. It is hard to say something against such raging nonsense. But as with many so called religious people I have met, I noticed that below the (religious) surface there is an absolute brutality against non-consenting people.

Given the historical context it always strikes me as strange how easily politicians talk about the "undeniable right of existence for Israel" - is there a natural right for violent occupation somewhere hidden in human rights?

So where does this put us Germans in the whole mess? Is it OK to be anti-Israel - given our "special historical relation" as it is usually called by German politicians? It is not only OK, it is right. Israel is an aggressive state that uses torture and brutal military power against civilians. It is NOT interested in self-defense. It has NO interest in peace in the Middle East. Peace would also conflict with the US goals in that area.

As a prof. who talks to students every day, I am extremely pissed off by the cowardice of German politicians. Politicians seem to be low-lifes by nature (just look at the recent Röttgen debate: a member of parliament who was also elected head of the German industry lobby and did not want to give back his MP mandate. He saw no conflict between roles here. The funny thing is: there really IS no conflict: all MPs are lobbyists in the German parliament and they don't even have to declare their money sources. He got criticized because his dual role made the way German democracy is constructed so very visible: a people with almost no democratic rights (representative democracy) and an almighty elite of lobbyists in the parliament. Another jewel of German politics is Reinhard Göhner. Head of the German employer lobby and MP, he has never been in a conflict of interests. Sure: always on the side of his lobby, never on the side of the people he should represent as an MP.). I just love the way our lobbyists are trying to hide the fact that as members of parliament they are always in a conflict of interests with their lobby: they try to reduce this conflict to the question of "can you do two jobs at the same time? - as in daily hours or travel time..." As if this were the question. The head of the German CDU, Kauder, must really think we are complete idiots. But given all that, the way Angela Merkel appeases Bush and the Israelis sets a new record even for German politics. German Chancellors seem to have a weak spot for dictators and war criminals: Schröder had Putin as a friend, who is famous for his brutality in the Chechnya conflict.

I guess it is time to talk straight: to call Guantanamo what it is: A KZ (concentration camp) where people are held without any form of human rights being accessible to them. A thing the Nazis would be proud of.

And it's time to say that the US are aggressors also against us. Their secret services are conducting their dirty business within the EU just as well as outside. They disrespect our data protection and privacy laws and use economic force to get those data (airlines, SWIFT, transportation companies). It is time to recognize that what we once heard as so called communist propaganda has been the truth: the US don't give a shit about democracy and human rights outside their border - and if Bush and his crooks can get their way much longer it is questionable what happens inside the US.

And it is time to say that Israel took the land from the Palestinians illegally and has been killing the Palestinians ever since. Being German does not change historical facts. If more people said so - and stopped doing projects with Israeli companies, e.g. - this might force Israel into a strategy of peace. Even against the US strategy of war in the Middle East.

So what is the good news in this whole mess? I noticed more of my students wearing t-shirts with a clear political statement: the US aggressions against other nations during the last 60 years are listed, for example (this is a VERY long list). And the question is raised why acts of brutality against civilians are not called terrorism when conducted by the US. So there is hope yet. And we computer science people might not be so blind with respect to political and social issues as others believe.

- A politically correct - distributed - search engine, more

-

In the context of the Lebanon war I had some rather disturbing feelings about the way the war is covered by some media. It is not the generally very tame way every aggression from Israel is handled by the western media. The media seem to never really explain the context of military actions: that Israel does everything to prevent peace in this region - it would stop them from acquiring more land. This is pretty much a given: no critical background analysis. But during the last weeks I noticed something strange in the way even the news themselves seem to get filtered: Google News usually does a good job of giving me an overview of the day's events. In this case though it does not report a lot on this new war. After a few days media coverage of the Lebanon war was either dropped completely or the news moved far down the page. This may be coincidence but it made me think about the role of search engines again.

Google, Microsoft and Yahoo are well known for putting money above morals when it comes to business deals with China, offering data to the CIA and so on. Could we build an alternative based strictly on peer-to-peer technologies? The hardware base of large search engines surely is impressive but it is dwarfed by the overall amount of information processing capabilities present across homes. Not to forget the HUGE bandwidth available there if we take all the ADSL, ISDN and modem connections together. Seriously - eMule and others do a fine job in finding files. What stops us from building a completely distributed search engine?

In one of our software projects Markus Block and Ron Kutschke built the so called eBay killer: a p2p application called tradingcenter (find it on the JXTA homepage) that allowed distributed auctions to be conducted. It included security concepts. Could we build a distributed search engine based on federation and social software? Could the success of Wikipedia be repeated in the search area?
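To make the question a bit more concrete, here is a minimal sketch of one way a search index could be spread over peers: every term is hashed to the peer responsible for it. This is only an illustration under simplifying assumptions. The names `Peer`, `peer_for`, `publish` and `search` are hypothetical, everything runs in one process, and simple modulo hashing ignores peers joining and leaving, which a real p2p system would have to handle.

```python
import hashlib

class Peer:
    """A hypothetical peer that stores one slice of the inverted index."""
    def __init__(self, name):
        self.name = name
        self.index = {}  # term -> set of document ids

def peer_for(term, peers):
    """Pick the peer responsible for a term by hashing the term."""
    digest = hashlib.sha1(term.encode("utf-8")).digest()
    return peers[int.from_bytes(digest[:4], "big") % len(peers)]

def publish(doc_id, text, peers):
    """Register every term of a document at the peer that owns the term."""
    for term in set(text.lower().split()):
        peer_for(term, peers).index.setdefault(term, set()).add(doc_id)

def search(query, peers):
    """Ask the owning peer for each query term and intersect the hits."""
    result = None
    for term in query.lower().split():
        hits = peer_for(term, peers).index.get(term, set())
        result = set(hits) if result is None else result & hits
    return result or set()

peers = [Peer("home-1"), Peer("home-2"), Peer("home-3")]
publish("d1", "lebanon war news", peers)
publish("d2", "search engine news", peers)
print(search("war news", peers))
```

Ranking, trust between peers and spam resistance are left out entirely; those are exactly the parts where federation and social software would have to come in.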

- SOA in the INTRANET - an exchange

-

On the occasion of an experience exchange between two large scale enterprises several statements were made that seem to contradict many things which are typically claimed in the context of SOA and Webservices.

Just as a reminder: SOA and Webservices are supposed to

-

allow easy and flexible use of services across enterprises

-

allow different security and transaction systems to operate across company borders

-

reduce complexity by outsourcing

-

allow concentration on core abilities

-

XML everywhere allows for fast and easy interoperability across languages

-

Web Services security allows for federation of trust and easier B2B

-

make re-engineering of processes possible and allow a specification of IT services in BUSINESS language terms

-

A top down business process modelling approach is well suited to get SOA started.

-

distributed business services are supported by new transaction models (like compensation, long running TAs)

-

and for the pure intranet aficionado: enterprise service bus technology will go way beyond EAI apps with respect to ease of data conversion, reliable transport and change support via pub-sub mechanisms.
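For readers who have not met the compensation model mentioned in the list: instead of holding locks across services, each step comes with an undo action that is run if a later step fails. The following is a minimal sketch, assuming hypothetical booking functions; it is not taken from any of the products discussed.

```python
def run_with_compensation(steps):
    """Run (action, compensation) pairs; undo completed steps on failure."""
    done = []
    try:
        for action, compensate in steps:
            action()
            done.append(compensate)
    except Exception:
        # Undo in reverse order, only for the steps that actually completed.
        for compensate in reversed(done):
            compensate()
        return False
    return True

log = []

# Hypothetical business services, invented for this illustration.
def book_flight():
    log.append("flight booked")

def cancel_flight():
    log.append("flight cancelled")

def book_hotel():
    raise RuntimeError("no rooms available")

def cancel_hotel():
    log.append("hotel cancelled")

ok = run_with_compensation([(book_flight, cancel_flight),
                            (book_hotel, cancel_hotel)])
print(ok, log)
```

Note that between the booking and its cancellation other parties can see the intermediate state, which is the price paid for not using an atomic transaction.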

The two enterprises had already started using service orientation in their intranets but their experiences were different from what the SOA PR claims:

-

absolute governance is a must: Without elaborated tools and procedures to ensure service compatibility nothing will work together

-

Absolute high-availability required: organisations become absolutely dependent on services being available.

-

Re-use of services increases dependencies and emphasizes the need of high-availability

-

Complexity increases as dependencies grow

-

life-cycle management becomes increasingly hard and costly

-

Webservice use increases only at the border of the enterprise. Their use requires lots of manual tweaking due to incompatibilities etc. in the area of security.

-

Web service security will probably mostly consist of an XML firewall

-

XML data conversions are VERY costly

-

Remoting is VERY costly and SOA design and architecture is DEEPLY influenced by the choice of remote interfaces and locations

-

Workflow and process management require deep organizational changes and there is no business case for it or the organizations are not ready for it.

-

J2EE and .NET interoperability is possible but cumbersome to do.

-

Bottom up component and service definition is quite possible too.

-

Atomic transactions are still the dominant way to ensure data consistency in intranet environments

-

At least two, sometimes three or more versions of a service need to be maintained

and the Enterprise Service Bus:

-

not used yet due to performance issues

-

unclear semantics of data

-

hidden complexity

-

high cost of conversions which need to be avoided by all means

If governance is so critical, how will service design work across companies? Ditto for life-cycle issues, complexity etc. How will webservices gain support if they fail exactly where they should shine - at the company border? Remoting and XML processing seem not to be transparent to the architecture - how will this be reflected in SOA analysis and design?
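Why remoting is not transparent to the design can be shown with a toy example: a fine-grained interface forces one round trip per attribute, a coarse-grained one returns everything in a single call. This is a minimal sketch; `RemoteCustomerService` and its methods are hypothetical stand-ins, and a counter replaces the real network.

```python
class RemoteCustomerService:
    """Stand-in for a remote service; every method call is one round trip."""
    def __init__(self, data):
        self._data = data
        self.round_trips = 0

    # Fine-grained interface: one call per attribute.
    def get_name(self, customer_id):
        self.round_trips += 1
        return self._data[customer_id]["name"]

    def get_city(self, customer_id):
        self.round_trips += 1
        return self._data[customer_id]["city"]

    # Coarse-grained interface: the whole record in one call.
    def get_customer(self, customer_id):
        self.round_trips += 1
        return dict(self._data[customer_id])

records = {42: {"name": "Miller", "city": "Stuttgart"}}

fine = RemoteCustomerService(records)
label_fine = f"{fine.get_name(42)}, {fine.get_city(42)}"

coarse = RemoteCustomerService(records)
customer = coarse.get_customer(42)
label_coarse = f"{customer['name']}, {customer['city']}"

print(fine.round_trips, coarse.round_trips)
```

Both variants produce the same label, but the number of round trips differs, and with real latency and XML conversion per call this difference ends up shaping the service decomposition.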

Things we need to learn more about:

- proper interface design for SOA services
- proper ways to do domain decomposition
- proper ways to communicate interfaces and to achieve a collaborative effect (avoid redundancies)
- how to align remoting and XML conversion with service decomposition

Now for a nice contrast: Mashups - Ajax-driven aggregation of information at clients, using simple web interfaces like the ones from Amazon and Google. Why are those aggregation and re-use efforts kind of emergent and self-organized? What makes them different from SOA approaches? Perhaps it is the intranet perspective that makes SOA and webservices look bad because they require different business models. Mashups are pure internet community things - social efforts per se. Using those technologies to the fullest extent would mean re-creating the internet climate within companies: free, collaborative, non-hierarchical ways to interact. Is this going to happen?

-

- Security Madness

-

Another bad day for civil rights and real democracy. The German parliament is discussing new legislation that would give the secret services extended powers (yes, we have several of them and they are well known for violating civil rights, or for attacking prisons with bombs, e.g. in Celle). If you follow the ARD report to the interview with Hans-Peter Uhl (CSU) you will find this gem of polit-speak:

“Uhl: The package of laws that was passed back then has proven itself.

tagesschau.de: Can you give examples of the points in which the laws have proven themselves?

Uhl: The surveillance of the scene suspected of terrorism has yielded concrete indications of threat situations. These led to the security agencies taking action and to arrests being made. Possibly, preparatory acts for possible attacks, whether at home or abroad, could also be prevented or disrupted.

tagesschau.de: Can you name a concrete example?

Uhl: I am not aware of any case. (sic!!!!, WK) But I cannot rule out that there have been cases in which attacks or their planning were prevented thanks to information from the Verfassungsschutz.

tagesschau.de: Why should the powers of the services be extended?

Uhl: These measures are justified and indispensable. A cowardly terrorist bombing cannot be prevented with ultimate certainty (sic !!!!, WK) in a mobile society such as the one we live in, especially in big cities. That means there is only one chance: during the preparation of the act one has to get ahead of the perpetrators with intelligence-service means (sic !!!! WK). Once the perpetrator is on his way with the bomb, it is as a rule too late.”

So he does not have a single example of where the snooping laws (from 2001) really helped but he wants more of them. And the rationale is quite simple: because terrorism is hard to prevent, the security forces need to be pro-active and start snooping even earlier.

Ok. So murder is also hard to prevent - every day people get killed. This did not lead us to monitor children, to do DNA analysis on innocent youth or to track anybody in this society without cause. I believe the murder argument shows the bureaucratic madness of the terrorism argument.

But the bad day is not finished yet. Have you heard about the Karlsruhe conference on security research - with a keynote by Annette Schavan, former secretary of schools in BW and now responsible for research in Berlin? The conference deals with technical means to detect bombs, explosives etc. Take a look at the toys of those researchers here: Future Security - Program. It is depressing to see that security is only seen as a technical problem. Every political dimension has been removed from the discussion.

The scenarios presented at the conference are quite interesting:

- loss of energy infrastructure
- panic between people of different cultures at one place !?!?
- loss of internet functionality

Bruce Schneier's recent competition for the craziest terror scenario comes to mind....

- SOA in your Enterprise - by IBM Global Business Services. A seminar here at HDM, 10. November 2006

-

Everybody in IT is currently doing something with SOA. Either analyzing, implementing or running it.


What makes SOA so interesting for enterprises and why just now? What are the challenges with SOA that companies have to solve? How is a SOA strategy successfully implemented? Examples for successful implementations? What are the core technologies behind SOA? Consultants from IBM Global Business Services have created the following agenda. Their goal is to present the core topics on SOA and to answer the above questions. Agenda:

-

What makes SOA special - the business view

-

SOA technical architecture

-

Security in a SOA environment

-

SOA Governance

-

SOMA - the methodology for SOA development

The presentations are ideal for people who need to make decisions on SOA in the near future of their enterprise. You will find more details on this upcoming event shortly here and at the homepage of HDM Stuttgart. If you would like us to send you more information or would like to register early, you will find a link here shortly.

The event is free of charge but an early registration will secure your place.

-

- Games Day: We have gained a level

-

Not being a games developer, I found the day quite educational. Here are some of the things I learned today:

There is no recognized theory on the effects of games on children and adults. As always with social effects it depends on the circumstances and environmental forces as well. A one-dimensional explanation misses many things, like the development and practice of social skills in multi-player games. On the other hand, results such as those from Christian Pfeiffer on the effects of early media exposure on success at school warrant careful handling of media on the part of parents. There is lots to cover in future versions of the Games Day and we should keep the focus on technical AND social aspects of games. The presentation by Prof. Susanne Krueger met a public that picked up quickly on those controversial topics and before we realized it we had already managed to be one hour behind our schedule...

As far as addiction is concerned, games seem to cause the same problems as everything else: humans simply seem to be addicted entities anyway - just the drug varies and your craze may not be mine (;-). Some gamers reported that friends had problems finding a responsible way to deal with games - especially multi-player games with their potential for group pressure. But the same goes for online casinos, phone sex etc. I guess.

Michael Wiekenberg started the technical session with an introduction to modelling with meshes and textures, much to the benefit of the not so game-savvy public. Then Valentin Schwind took over and my - is game modeling complex and difficult. He used 3dsmax to demonstrate the various effects and endless ways to configure and model assets and it became clear how difficult this program already is. For professional development, plug-ins are created to provide game specific services and to maintain a clear modeller-programmer boundary while putting as much as possible into modelling.

Valentin Schwind shocked the public when he told us the time it takes for newcomers to get familiar with advanced modelling using 3dsmax. But there are also many features in this program that use symmetry e.g. to reduce modelling effort.

Andrea Taras showed us ways to map textures to wire models and to do this in a pleasing way. It is clearly more than just technique. To achieve pleasing artistic results one should have real drawing experience, and she suggested a course by our colleague Susanne Mayer where she is currently learning how to draw. The good news was that texture mapping is not always so difficult and even beginners can achieve nice results soon.

After her talk my colleague Jens Hahn - our graphics, interactive media and virtual reality specialist - gave a talk on current developments in graphics rendering. He showed advanced shading techniques (not to be confused with simply creating shadows (;-)) using programmable graphics hardware for pixel rendering and fragment processing (applying the texture information). According to him the fact that those processing steps have become programmable hardware modules is responsible for realistic rendering in realtime.

From a software architecture point of view I enjoyed seeing the graphics rendering pipeline as a nice representative of data flow architecture and communicating sequential processes (CSP) - one of my conceptual topics this term. This type of architecture scales extremely well in the context of huge amounts of data and keeps the processing algorithms simple (no locking etc.). Jens showed Nvidia's C for Graphics (Cg) as a language used to program the hardware modules.
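The data flow idea can be sketched in a few lines: each stage is a sequential process that only talks to its neighbours through a channel, so the stage functions themselves need no locks. The following is a minimal sketch using threads and queues on the CPU, not GPU code; the stage functions `transform`, `rasterize` and `shade` are hypothetical stand-ins for vertex, raster and fragment processing.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the data stream

def stage(func, inbox, outbox):
    """One sequential process: read an item, transform it, pass it on."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # propagate shutdown downstream
            return
        outbox.put(func(item))

def run_pipeline(items, funcs):
    """Connect the stage functions with queues and push all items through."""
    queues = [queue.Queue() for _ in range(len(funcs) + 1)]
    threads = [
        threading.Thread(target=stage, args=(f, queues[i], queues[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for t in threads:
        t.start()
    for item in items:
        queues[0].put(item)
    queues[0].put(SENTINEL)
    results = []
    while True:
        out = queues[-1].get()
        if out is SENTINEL:
            break
        results.append(out)
    for t in threads:
        t.join()
    return results

# Hypothetical toy stages, invented for this illustration.
def transform(vertex):
    return (vertex[0] * 2, vertex[1] * 2)

def rasterize(vertex):
    return (int(vertex[0]), int(vertex[1]))

def shade(pixel):
    return {"pixel": pixel, "color": (pixel[0] + pixel[1]) % 256}

frame = run_pipeline([(1.5, 2.0), (3.0, 4.25)], [transform, rasterize, shade])
print(frame)
```

Because each stage runs in exactly one thread and the queues are FIFO, the order of the items is preserved while all stages work at the same time on different items.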

Then came the second of the Wiekenberg double pack: Markus Wiekenberg demonstrated in a much acclaimed talk the development and use of a dialog editor for a PlayStation 2 game. At least for non-gamers like myself it became obvious how games really are created and what a huge role the correct use and even development of tools plays in this area. The dialog editor helped game creators to create realistic dialogs by letting them change camera positions easily. The editor helps with accessing and composing the endless amounts of asset data kept in databases and even tries to support different language versions (yes, it is difficult to map different languages to the same scene length). It was amazing how the combination of pre-configured animations and configurable effects like laughing, getting drunk etc. was able to create quite realistic dialogs.

Markus Wiekenberg had developed this editor and did not cease to emphasize the importance of usability in this project: only when the UI meets the expectations, knowledge level and working style of those designers will the increase in productivity be realized.

The software related talks were continued by Christoph Birkhold, who reported experiences from his latest game development: Desperados 2. He showed many scenes and explained the technology used to create them - ranging from the core architecture to the inclusion of a physics engine. He explained that whoever wants to integrate such an engine to increase the realism of scenes should do so as early as possible in a project. The reason is that there are many conflicts between physics and game logic and it can happen that the decisions of the physics engine threaten the playability of the game: What happens if physics decides to put a rag doll at a place where game logic says no?
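One conceivable policy for such a conflict is to let physics propose a resting place and give game logic a veto. The sketch below is only an illustration of that idea, not what Desperados 2 does; the grid model and the names `Cell`, `physics_rest_position`, `game_logic_allows` and `place_body` are assumptions made up for this example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cell:
    x: int
    y: int

def physics_rest_position(start, velocity, steps):
    """Toy physics: move with constant velocity for a number of steps."""
    return Cell(start.x + velocity[0] * steps, start.y + velocity[1] * steps)

def game_logic_allows(cell, blocked):
    """Game logic vetoes cells that must stay free, e.g. a doorway."""
    return cell not in blocked

def place_body(start, velocity, steps, blocked):
    """Let physics propose; step back along the path until logic accepts."""
    for s in range(steps, -1, -1):
        candidate = physics_rest_position(start, velocity, s)
        if game_logic_allows(candidate, blocked):
            return candidate
    return start  # nothing on the path is allowed: stay where we started

doorway = {Cell(3, 0)}
print(place_body(Cell(0, 0), (1, 0), 3, doorway))
```

The earlier the engine is integrated, the earlier one finds out how often such a veto fires and whether the corrected result still looks believable.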

After these two talks from professional game programmers, two MI students, Thomas Fuchsmann and Stefan Radicke (they run our own games tutorial at CS&M), talked about their own game projects and showed several scenes. It started with their 3rd term project "FinalStarfighterDeluxe" (their little starship became the logo of our Games Day). And it ended with their current project: the development of a 3D game engine. Since they started development from scratch they are in an ideal position to teach game programming in their tutorial.

Last but not least Kerstin Antolovic and Holger Schmidt from Electronic Media at HDM presented their mobile game. It is programmed in Java on J2ME, and they told us about the problems with incompatible implementations of Java on various mobile phones and the difficulties of Bluetooth programming. Their game had to deal with minimal computing and storage resources and still included several different modes ranging from timed attacks to multi-player environments. Despite the difficulties the possibilities behind mobile games are mind-boggling: imagine some 80,000 people in a stadium all equipped with Bluetooth phones...

This excellent development project also showed that more mixed teams consisting of electronic media and computer science students are needed.

The Games Day ended with a short discussion of future versions of this event. The wish list included e.g. specialized tutorials for and by industry experts, programming competitions, multi-day conferences etc. We will conduct a Games Day wrap-up soon where we will discuss the results and start planning the next one. In the meantime we should also intensify our relations with our partner university at Dundee, Scotland. They seem to specialize in games and multimedia development.

Looking at the large number of participants the Games Day obviously was a success. But did we gain a level at CS&M? I think so. The Games Day technical presentations came from our own students, alumni and their friends to a large degree. It shows a high level of technical competence and an excellent motivation to turn games development into a permanent topic in our faculty.

- First HDM Games Day - Program

-

This is the agenda for our first day on games (computer, consoles, mobile) and games development. One of our goals is to introduce students to the world of games creation. Another goal is to bring us in touch with specialists working in the game industry and start a discussion on the future of game development at HDM. We hope that the Games Day will serve as a kick-off for future events which might even spread over several days.

Electronic games have grown into a substantial industry making millions with online games, strategy games and adventures. But there is more to games than just economics. Today those games - especially the online and multiplayer variants - are having social impacts as well: from getting lost in cyber space to meeting lots of people and making friends online. This means that many different faculties at HDM have a common interest in computer games: design and arts, the economics behind game production, PR and marketing as well as information ethics and last but not least the architecture and design of games as complex programs. The Games Day leaves room for discussions on the future of game development as well.

The First HDM Games Day provides an introduction to the world of game design and programming. Demos of games will give an impression of the current state of the art in computer games (or as the Gamestar magazine recently said: "You have gained a level"). The day is free and open to the interested public, both from within HDM and from industry. It will take place on Friday 16.06.2006 at Hochschule der Medien, Stuttgart, Nobelstrasse 10 (see the HDM homepage for streaming information and last minute changes). We start at 9.00 in room 056.

- 9.00 - 9.30 "Böse Games?"

-

Susanne Krüger, Timo Strohmaier, Walter Kriha, HDM - open discussion

- 9.35 - 10.20 Game Modeling

-

Valentin Schwind, Michael Wiekenberg

- 10.25 - 10.55 Textures in Gaming

-

Andreas Taras

- 11.30 - 12.00 Shading Techniques for Computer Games

-

Jens Hahn

- 12.05 - 12.45 Tool Pipeline

-

Markus Wiekenberg

- 12.45 - 13.30 Lunch Break

- 13.30 - 14.45 Framework Architecture and Game Physics with Demos

-

Christoph Birkhold

- 14.45 - 15.15 Discussion Round 1

-

All

- 15.15 - 15.45 Coffee Break and Demos

- 15.45 - 16.30 Games Programming Basics with Demos

- 16.35 - 17.05 Mobile Games

-

Representatives from Electronic Media (AM)

- 17.05 - Get Together

This is the list of topics we would like to see discussed at our second games day in the winter term:

- Cell Process Architecture for Games, IBM Lab Böblingen
- Audio in Games, Amsterdam
- Game Engine
- Performance Problems in Games
- MMOGs and P2P
- Usability and Eye tracking in games development
- Security (DRM etc.)
- Game Economics

It is quite possible that the next Game Day Event spreads over a couple of days. For a nice introduction to online games see the new IBM Systems Journal:

- The pipeline architecture in computer linguistics

-

In his introduction to computer linguistics today Stefan Klatt mentioned the pipeline as being the current architecture of choice for extracting information from documents. The pipeline allows modules to sequentially work on text fragments and extract information from those parts, e.g. break up the text into words (tagging) or enrich the raw text with meta-data like when sentences form a more complex argument.

Those modules can be rule-based or statistical in nature, with a combination delivering the best results with respect to recall and precision. The modules can use thesauri or other forms of lexica as well.

The art in building pipeline architectures lies in defining the shared data structure those modules work on. This structure must be extensible by each module as nobody can know which analytical processes will be available in the future. At the same time the modules must be and stay independent of each other. This means that an addition to the shared or common data structure cannot render existing modules incompatible. A high degree of self-description and meta-meta layers is probably required.

At this point the Unstructured Information Management Architecture (UIMA) from IBM comes to mind. It can be downloaded from alphaworks, and a very good IBM Systems Journal issue on the topic of unstructured information processing exists as well. It describes the framework and pipeline interfaces that IBM defined to connect modules. It also describes the Prolog data structure used to capture shared information. Due to this data structure and the processing pipeline working on it, later modules can use the original textual input AND all the intermediate results of previous modules. This is a major advantage as it relieves the programmer of a module from the tedious work of getting input etc. It also gives high quality results to later modules. On top of that, the whole process is sequential and does not require error-prone locking and synchronization primitives.

But is it the best we can do? Just think about how our brain seems to process language on the different levels of phonetics, lexicon, syntax, semantics and pragmatics. It looks like in our brain the different layers can somehow share information IN PARALLEL. Partial semantic results can help the word tokenizer to perform better.
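To make the sequential pipeline and its shared data structure a bit more concrete, here is a minimal sketch in Python. It is not UIMA and does not use its interfaces; the names `SharedDocument`, `add_layer`, `tokenizer` and `capital_tagger` are made up for illustration. The only point is that every module reads the raw text plus all earlier results and adds its own layer without touching existing ones.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class SharedDocument:
    """Common data structure: the raw text plus named annotation layers."""
    text: str
    layers: Dict[str, List[Any]] = field(default_factory=dict)

    def add_layer(self, name: str, values: List[Any]) -> None:
        # Modules only add layers; they never overwrite an existing one,
        # so older modules keep working when new modules are added.
        if name in self.layers:
            raise ValueError(f"layer {name!r} already exists")
        self.layers[name] = values


Module = Callable[[SharedDocument], None]


def tokenizer(doc: SharedDocument) -> None:
    # Naive whitespace split, standing in for a real tokenizer.
    doc.add_layer("tokens", doc.text.split())


def capital_tagger(doc: SharedDocument) -> None:
    # Uses the result of an earlier module and could still read doc.text.
    tags = ["CAP" if tok[:1].isupper() else "LOW" for tok in doc.layers["tokens"]]
    doc.add_layer("tags", tags)


def run_pipeline(text: str, modules: List[Module]) -> SharedDocument:
    doc = SharedDocument(text)
    for module in modules:  # strictly sequential, so no locking is needed
        module(doc)
    return doc


result = run_pipeline("Stefan Klatt explains pipelines", [tokenizer, capital_tagger])
```

A new analysis step would simply be appended to the module list and would register a layer of its own, which is one simple way to keep modules independent of each other.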
From a layered architecture point of view the mixture and cross-fertilization of layers is not desirable as it tends to create tight couplings between layers. Each layer should only depend on its lower neighbour. But from a processing point of view each layer should be able to use partial results from other processing steps as early as possible to improve its own results.

What could an architecture look like if we want to achieve parallel sharing of partial information without ending in a nightmare of tight couplings or synchronization problems? How about creating a distributed blackboard (aka Linda tuple space) to represent the common data structures?

An example might help: perhaps you know the experiments done with text lines cut in half horizontally? The human brain still seems to be able to read those words, albeit at a lower recognition rate. A word tagger might have some problems detecting the proper words from OCR input. But syntactic and semantic analysis modules could provide early hints once the first parts of a sentence have been guessed by the tagger. It is my guess that some kind of cross-fertilization between processing modules is what makes the brain so powerful in the area of NLP.
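The blackboard idea can be sketched in a few lines of Python. This is a single-process toy, not a distributed Linda implementation: there is no concurrency, and the class `Blackboard`, the modules `tagger`, `syntax_hint` and `resolver`, and the OCR guesses are all assumptions made for illustration. It only shows that modules never call each other; they post partial results to a shared space and react to whatever others have posted.

```python
from typing import Callable, List, Optional, Tuple

Entry = Tuple[str, int, str]  # (layer, word position, value)


class Blackboard:
    """Very small stand-in for a tuple space: post entries, read by pattern."""

    def __init__(self) -> None:
        self.entries: List[Entry] = []

    def post(self, entry: Entry) -> bool:
        if entry in self.entries:
            return False  # nothing new was contributed
        self.entries.append(entry)
        return True

    def read(self, layer: str, position: Optional[int] = None) -> List[Entry]:
        return [e for e in self.entries
                if e[0] == layer and (position is None or e[1] == position)]


def tagger(board: Blackboard) -> bool:
    # Hypothetical OCR output: word 1 is damaged, so two candidates are posted.
    guesses = [("candidate", 0, "the"), ("candidate", 1, "cat"),
               ("candidate", 1, "eat"), ("candidate", 2, "sleeps")]
    return any([board.post(g) for g in guesses])


def syntax_hint(board: Blackboard) -> bool:
    # Toy rule: after the article "the" a noun is expected, so prefer "cat".
    nouns = {"cat"}
    changed = False
    for _, pos, word in board.read("candidate"):
        if word == "the":
            for _, nxt, cand in board.read("candidate", pos + 1):
                if cand in nouns:
                    changed |= board.post(("word", nxt, cand))
    return changed


def resolver(board: Blackboard) -> bool:
    # Accept a candidate as final when it is the only one for its position.
    changed = False
    for _, pos, word in board.read("candidate"):
        if len(board.read("candidate", pos)) == 1:
            changed |= board.post(("word", pos, word))
    return changed


def run(board: Blackboard, sources: List[Callable[[Blackboard], bool]]) -> Blackboard:
    # Keep offering the board to every module until nobody adds anything.
    while any([source(board) for source in sources]):
        pass
    return board


board = run(Blackboard(), [resolver, syntax_hint, tagger])
sentence = " ".join(w for _, _, w in sorted(board.read("word"), key=lambda e: e[1]))
```

The order of the modules in the list does not matter here, because each one only looks at the board. A real tuple space would add concurrent access and distribution on top of this, which is exactly where the synchronization questions raised above come back in.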

Besides the processing architecture, the talk by Stefan Klatt provided a very good introduction to the concepts behind computer linguistics and the methodologies used there. It is a fascinating area which has to combine computer science, linguistics, mathematics, philosophy and psychology to be successful.

- Web Analytics, RFIDs and J2EE Adapters - free IBM Redbooks

-

Just a reminder: you can get free and good information from IBM Redbooks. The introductory chapters are usually not specific to IBM products and give a good overview of the topic. Here are some examples. The web analysis book looks especially nice.

- How to use Web Analytics for Improving Web Applications
- WebSphere Adapter Development
- IBM WebSphere RFID Handbook: A Solution Guide

- Current Thesis Work at Computer Science and Media

-

After our perfect rating in Zeit/CHE - computer science and media at HDM turned out to be one of the four best technical (;-) computer science faculties - it is time to present some of the latest thesis work done at the computer science and media faculty of HDM.

The last two thesis papers clearly show the wide range of interests that our students in computer science and media have.

- Desktop Search Engines for the Enterprise

-

Dani Haag did a research-oriented thesis at UBS AG. The task was to define criteria for the use of a desktop search engine on an enterprise scale. After a theoretical part where he investigated human search behavior and strategies, he covered the topic of desktop search in depth. The results were quite interesting.

- Desktop search is radically different from internet search: it deals with information that a user typically has already seen once and that she wants to find again quickly using criteria like the tool used to create it, creation time etc.
- Desktop search needs to serve different user groups and intentions, ranging from specialists looking for a very specific piece of research documentation to secretaries trying to locate a memo.
- Desktop search can save a lot of time. Just imagine 30 minutes of search time per person per day in a company.
- Desktop search needs to be federated in an enterprise environment: other search indexes need to be used. This is especially true for network drives. An update of a public piece of information on a shared global network drive would result in thousands of local search engines updating their indices. And this would simply kill the company network for three days, given a large enterprise.
- Desktop search needs to be extensible with respect to new formats.
- And last but not least, desktop search is also a security problem. Google, for example, hooks the Windows API and gets access to e-banking information etc. Google reads this information before it enters an SSL channel. Caches are also not safe from desktop search engines, and users need to be very careful to prevent confidential information from showing up in indices.

But the most important feature of a good desktop search engine clearly is good usability. Here the products from Microsoft and Google seem to have an advantage over smaller companies that may have been in this business for a longer time but failed to develop easy to use interfaces. All in all there is little doubt that the future of local machines will include a desktop search engine as the amount of data per person is still increasing. This includes mail, internet and application data.
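The federation point above can be illustrated with a small sketch in Python. It is not taken from the thesis or from any product; the `Index` class, the file paths and the sample texts are invented. The idea is simply that the shared drive is indexed once, next to the data, and each desktop combines that index with its own local one at query time instead of crawling the network drive itself.

```python
from typing import Dict, List, Set


class Index:
    """Toy inverted index: word -> set of document paths."""

    def __init__(self) -> None:
        self.postings: Dict[str, Set[str]] = {}

    def add(self, path: str, text: str) -> None:
        for word in text.lower().split():
            self.postings.setdefault(word, set()).add(path)

    def search(self, word: str) -> Set[str]:
        return self.postings.get(word.lower(), set())


def federated_search(word: str, indexes: List[Index]) -> List[str]:
    # Ask every index and merge the hits; no index is copied to the desktop.
    hits: Set[str] = set()
    for index in indexes:
        hits |= index.search(word)
    return sorted(hits)


local = Index()   # maintained on the user's machine
shared = Index()  # maintained once, next to the network drive
local.add("C:/docs/memo.txt", "budget memo draft")
shared.add("//share/policy.txt", "travel budget policy")

results = federated_search("budget", [local, shared])
```

With this split, an update on the shared drive triggers one index update on the server side rather than thousands of re-indexing runs across the company network.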

- Central User Repository and Business Process Re-engineering

-

How expensive can it be to introduce a new employee to a company? The answer depends on the structure of the company (distributed vs. centralized), the applications and the infrastructure provided. Marco Zugelder's thesis covered the creation of a centralized user repository, together with the business process analysis needed to improve the process of hiring new employees. The result was the design of an LDAP database with interfaces to different kinds of applications and a strategy for moving incrementally towards this centralized repository.

During his business process re-engineering work he studied existing processes at one of the largest computer hardware suppliers for businesses in Europe. He used the BPMN notation to describe the processes found. But he also quickly realized that the organization of change would be a major issue during the business process analysis. The investigation of current practices and processes raised some questions and suspicions among the employees which could seriously impact the project. Markus Samarajiwa's talk at the last IBM University day came in quite handy here: how to deal with organizational change problems.

- Autoconfiguration of home networks with semantic protocol definition

-

The home of the future will not be configured by its users - at least not completely. The complexity of updates to various devices is just too high to be handled by the end-user. This means that application service providers will have to do the maintenance work, e.g. updating the software on dish-washers and heaters. Unfortunately most devices have different configuration values and protocols. This was the starting point for Ron Kutschke at Alcatel. His task was to find a solution to this problem and, following ideas from our long-term partner Dr. Stefan Rupp at Alcatel, he decided to use a semantic approach: he designed an ontology for the definition and description of a generic configuration protocol based on OWL-S. In a second step this generic protocol was then mapped to specific configuration structures and transport protocols.

This interesting work, which resulted in a running prototype, has been put in the public domain and can be downloaded here: Thesis, Presentation, Appendix (technical), Software (source). Let me know if you have problems accessing the software or thesis. To me the thesis is another proof of the increasing importance of semantic technologies in service oriented architectures, and we currently have a number of theses at HDM in this area.
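The second step, mapping a generic configuration description to device-specific structures, might look roughly like the following Python sketch. It is a plain dictionary lookup, not OWL-S and not the thesis prototype; the device names, generic setting names and device keys in `DEVICE_MAPPINGS` are all hypothetical.

```python
from typing import Dict

# Hypothetical generic setting names and per-device key names (not from the thesis).
DEVICE_MAPPINGS: Dict[str, Dict[str, str]] = {
    "heater": {"target_temperature": "TEMP_SET", "power": "PWR"},
    "dishwasher": {"power": "on_off", "program": "prog_id"},
}


def to_device_config(device: str, generic: Dict[str, str]) -> Dict[str, str]:
    """Translate generic settings into the key names one device understands."""
    mapping = DEVICE_MAPPINGS[device]
    unknown = set(generic) - set(mapping)
    if unknown:
        raise KeyError(f"{device} does not support: {sorted(unknown)}")
    return {mapping[name]: value for name, value in generic.items()}


heater_config = to_device_config("heater", {"target_temperature": "21", "power": "on"})
```

In the semantic approach described above, a table like this would not be hard-coded but derived from the ontology, so that a service provider can add new device types without changing the generic protocol.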

- On the Effects of Web Banners on Reception and Behavior

-

Sanela Delac did her thesis work with our usability specialist Prof. Michael Burmester. Using an empirical approach she investigated the effects of banners on reception. Different theories existed on this topic, ranging from the claim that users do not even notice those banners anymore to the claim that they still have a measurable effect on browsing behavior. She used the eye-tracking equipment of the usability lab to get hard data on reception and - together with a questionnaire - did a statistical analysis. It turned out that the effects of banners depend heavily on the browsing mode of the users. A user with a strong focus on something rarely noticed the banners. Users who just wanted to do some browsing without specific goals were much more influenced by banners.

Besides the statistical and computer science parts Sanela also had to get familiar with the psychological basics of reception, alarm signals and peripheral detection of input. The thesis worked out nicely and confirms once more that our students in computer science and media are able to work in very different fields just as well - combining their technical know-how with other sciences.

- Electronic Waste - Heaven Sent?

-

Matthias Feilhauer did a very nice thesis on the way industrialized societies currently deal with electronic waste: by exporting it into the so-called third world. He was able to show that this export is very much a mixed blessing. On the one hand those countries get money and raw materials at a cheap rate. On the other hand they suffer great environmental damage during this process.

Thanks to our friend Prof. Rafael Capurro he was able to get in touch with specialists from GLOBAL 2000. The thesis finally was written in Vienna.

What caught my attention in the thesis was the term "throw-away hardware". Apple iPods and mp3 players in general are just one kind of hardware that will never get repaired. It will be exchanged and thrown away. There are tons and tons of old or broken mobile phones and players waiting to be recycled in the third world.

Surprisingly little data exist on the quality of the recycling processes there.

- Computer Games Workshop at CS&M

-