Welcome to the kriha.org weblog

- Karl Klink on High-Quality Software on Mainframes

-

As many large companies are currently considering (or are already in the process of implementing) a move back to mainframe systems, this presents an opportunity for our students to learn more about a promising technical area. This is something they cannot learn from the current staff at most universities, as these professors have not worked on those machines for decades.

Mainframes are also a very interesting way to run a university's computing tasks with low overhead and low system administration costs. Just think of hundreds of virtual Linux instances which can be created on the fly. Students would then use their notebooks to connect.

But mainframes frequently require higher code quality than is usually achieved in "office-like" products. Karl Klink is a well-known expert in the area of high-quality software development and also the father of Linux on IBM mainframes. This "skunkworks" project has turned into a huge success over the last years and at the same time makes mainframes accessible to a new generation of software engineers.

- New technologies for secure software

-

One of the most exciting areas of security seems to be grid computing. The Globus toolkit uses so-called proxy certificates to delegate user rights securely across different services. This is the first time I have seen a delegation mechanism that does not require the client to hand over some form of token that allows the uncontrolled creation of further access tokens on the server side. Bad examples are delegating user ids and passwords, and the Kerberos way of shipping a Ticket Granting Ticket (TGT) from a client to other servers. True, it is only a TGT, but it can be used in unintended ways on the server side.
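The property that matters here can be illustrated as attenuation: a delegated credential may only carry a subset of its parent's rights, so a server receiving it can pass on less, but never mint something broader. This is a toy model under that assumption, not the actual Globus proxy certificate format; the `Credential` class and the right names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Credential:
    """Toy credential (hypothetical): who it acts for and exactly which rights it carries."""
    owner: str
    rights: frozenset

    def delegate(self, requested) -> "Credential":
        """Derive a credential for a downstream service.

        The result can only narrow this credential's rights; asking for a
        right the parent does not hold is refused.
        """
        requested = frozenset(requested)
        extra = requested - self.rights
        if extra:
            raise PermissionError(f"cannot delegate {sorted(extra)}")
        return Credential(self.owner, requested)


user = Credential("alice", frozenset({"read:data", "write:data", "submit:job"}))
job = user.delegate({"read:data", "submit:job"})  # handed to a compute service
print(sorted(job.rights))  # ['read:data', 'submit:job']
```

By contrast, a TGT shipped to a server behaves more like handing over `user` itself: whoever holds it can obtain service tickets for any service in the user's name.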

Security-Enhanced Linux (SELinux) is a very interesting way to restrain the almighty user/program alliance from causing too much damage. In other words: the typical access control lists of operating systems allow a program to use all the rights of a user to achieve whatever it wants. A user cannot put restrictions on her rights. ACLs only guard the entry to the objects they protect; a program started by a user takes over that user's rights completely. A nice example of this problem is the question "how many rights does the cp program need?". Another is the discussion of "the confused deputy problem" (see Confused Deputy Problem). SELinux does not use the usual answer to this problem, capabilities. Instead it uses the sandbox model: it puts programs and objects into domains and restricts the actions which can be performed in a domain. I will discuss the new book on SELinux shortly.
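To make the domain idea concrete, here is a toy model of type enforcement: a process runs in a domain, every object carries a type, and an access succeeds only if the ordinary user-level check passes and a policy rule allows that operation for that domain/type pair. The domain and type names and the rule table are hypothetical and do not follow real SELinux policy syntax.

```python
# Toy type-enforcement policy: (process domain, object type) -> allowed operations.
# All names are hypothetical; real SELinux policy is written in its own language.
POLICY = {
    ("cp_t", "user_home_t"): {"read", "write", "create"},
    ("browser_t", "user_home_t"): {"read"},
    ("browser_t", "download_t"): {"read", "write", "create"},
}


def te_allows(domain, obj_type, op):
    """Return True if the policy grants `op` to `domain` on objects of `obj_type`."""
    return op in POLICY.get((domain, obj_type), set())


def access(user_acl_allows, domain, obj_type, op):
    """Both checks must pass: the user's ACL rights AND the domain policy.

    The domain check can only take away rights the user has; it never adds any.
    """
    return user_acl_allows and te_allows(domain, obj_type, op)


# The same user runs both programs and owns her home directory,
# yet only cp may write there; the browser is confined to downloads.
print(access(True, "cp_t", "user_home_t", "write"))       # True
print(access(True, "browser_t", "user_home_t", "write"))  # False
```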

Another interesting software architecture is provided by OSGi (Open Services Gateway initiative). It uses a sandbox model to restrict services downloaded to embedded platforms. This technology is used e.g. in the automotive industry. Its Java-based security architecture relies on isolation, following a concept like the one used in smartcards (see the finread architecture). But unlike finread, the downloaded services do not get permissions through code signing. Instead, permissions need to be configured separately as specified by the Java 2 Security Architecture. See the interesting article from engineers at BMW. The reuse of packages between services seems to be an unsolved problem here. Expect more to come on OSGi security.

The mainframe is back, alive and kicking. I will soon announce a very interesting talk on mainframe software, but here I'd like to mention an excellent new redbook from IBM on Websphere Security on z/OS. This redbook covers application server security completely, including its integration with mainframe-internal components and external systems. At a handy size of merely 786 pages it is a quick read (;-).

The Grid computing groups suggest a system for inter-domain security assertions. The problem of mapping users, rights and attributes between virtual organisations and existing companies can be solved in different ways, but the typical approaches, which set up a complete federation system on top of the existing infrastructure, seem hardly workable. Other solutions describe a SAML-like assertion language (or abstract syntax) which allows a user to be mapped to another identity on a receiving system. The system uses semantics and interacting services to create those assertions. The assertions themselves come partly from the sending and partly from the receiving organisation, but no super-structure is required.

- Developments driving security today

-

In a recent report on future security trends, Gartner Group notes that the perimeter model of security borders is no longer sufficient. Others have noticed this too, e.g. Dan Geer in his remark on where a company's borders lie today (exactly where the company no longer has the authority to enforce a key). Gartner Group created the "airport" security model, which distinguishes several security zones with different QoS, trust and technical relations.

But I believe the future of security technology will differ even more. The concept of end-to-end security based on trust coming from central repositories will have to go. Channel-based security is less effective and will be replaced by object-based security, as proposed e.g. by the WS-Security standard for web services.

One driver for security is the demand for ad-hoc formation of virtual organisations across different existing organisations with their respective infrastructures. This demand is not new: about 10 years ago the "Infospheres" project at Caltech created a framework for ad-hoc collaboration between organisations (e.g. in case of a catastrophic event). Today Grid computing covers this area and creates new technologies (see above). In general, the lack of central security repositories (which is almost equivalent to losing the "domain" or "realm" concept) raises big problems for current security technology.

Another driver is mobility, with its associated host of small devices running some form of embedded software: smartphones, car entertainment systems and so on. Most of these devices either forbid dynamic software updates or pose a big security risk. The fact is that most operating systems using the typical ACL model of access control are unable to deal safely with downloaded code. Safe download of code requires either sandboxing or a capability-based system.
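The capability alternative can be illustrated with the cp example from the SELinux discussion: instead of running with all of the user's rights and receiving path names it could resolve to any file, the copy routine receives exactly two already opened streams. This is the principle of least authority (POLA) in miniature. It is only a sketch of the interface discipline: Python itself does not enforce capabilities, and `copy_stream` is a hypothetical name.

```python
import io
import shutil


def copy_stream(src, dst, chunk_size=64 * 1024):
    """Copy everything from `src` to `dst`.

    The function is handed two open stream objects and nothing else: it never
    sees a path name, so it cannot be talked into opening some other file with
    the caller's rights (the confused deputy problem).
    """
    shutil.copyfileobj(src, dst, chunk_size)


# The caller (e.g. a shell or a file dialog acting for the user) decides which
# objects to open and passes only those two references on.
source = io.BytesIO(b"downloaded payload")
target = io.BytesIO()
copy_stream(source, target)
print(target.getvalue())  # b'downloaded payload'
```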

Take a look at the video on POLA (the Principle of Least Authority) by Mark Miller and Marc Stiegler (get it via the erights.org homepage). It uses the SkyNet virus from Terminator III to explain the principles of POLA and also covers ideas for a secure browser.

And after watching the video, look at the latest threats like credential stealing via popups or the good old session stealing through web-trojans while you have a session running in a different browser window. I am not an expert in browser design, but the way browsers combine a global object model (DOM) with an interpreter engine looks like a barely controlled disaster waiting to happen. The web-trojan/session stealing problem is not a browser bug at all. Instead, web authors need to prepare their pages by tagging them with random numbers. When a request arrives without a valid random number, the web site can be sure that the link was not delivered by itself. And the popup-stealing trick is the same one that has been used with frames: code from site A takes effect in a connection to site B. The fix will only address this particular problem. A real solution could be to create a "closure" of a specific DOM part, an interpreter instance and a connection, thereby preventing the browser from mixing sites. Look at the DARPA browser analysis in this context.
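The random-number defence described above can be sketched in a few lines: the server issues an unpredictable token per session, embeds it in every page it delivers (for example as a hidden form field), and rejects requests that do not carry it back. This is a minimal sketch assuming an in-memory dict as the session store; `issue_token` and `check_request` are hypothetical names, not the API of any particular web framework.

```python
import hmac
import secrets

# Assumption: an in-memory session store; a real application would use its own.
_session_tokens = {}


def issue_token(session_id: str) -> str:
    """Create an unpredictable token for this session and remember it."""
    token = secrets.token_urlsafe(32)
    _session_tokens[session_id] = token
    return token  # embed this in every page/form the site delivers


def check_request(session_id: str, submitted_token) -> bool:
    """Accept a request only if it carries the token issued for this session."""
    expected = _session_tokens.get(session_id)
    if expected is None or not submitted_token:
        return False
    # Constant-time comparison, so response timing does not leak partial matches.
    return hmac.compare_digest(expected.encode(), submitted_token.encode())


token = issue_token("session-42")
print(check_request("session-42", token))     # True: link delivered by the site
print(check_request("session-42", "forged"))  # False: link planted elsewhere
```

A link planted by a web-trojan on another site cannot know the token of the victim's session, so the request it triggers is rejected even though the browser sends the session cookie along.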

- Java vs. .NET - impersonation and delegation on different platforms

-

Denis Piliptchouk's e-book from O'Reilly is not only a good comparison of the security mechanisms used in both platforms. It is also a good introduction to the problems of impersonation and delegation and how these mechanisms are interwoven with the platform technology. Piliptchouk distinguishes several layers of platform security: user, machine security, enterprise, machine configuration and application configuration, which is a good conceptual framework.

Here is what I have learnt:

-

Both platforms are getting more and more similar through the use of Kerberos, GSS-API, provider-based security mechanisms and PKI support.

-

Only .NET additions and extensions are able to bring more security to the Windows platforms.

-

Web services security is still a moving target.

-

.NET supports impersonation only in Windows-only networks and has no support at all for delegation across the internet.

-

What surprised me were the many facets of security available on MS platforms and the huge role of IIS in the context of authentication and impersonation.

There is too much in this PDF file to list here, but the content is definitely worth reading. Btw: the whole series of articles is available on the web, but if you are busy I'd recommend buying the complete PDF for a low price of 5 dollars.

J2EE security (even with EJB 3.0) is far from perfect, as this little piece from Michael Nascimento Santo's blog shows (the problem of JAAS credentials not being available, the problem with dynamic instance-based security etc.).

-

- Blog to find a job - an effective reputation system

-

Blogging has certainly become quite popular. But is it simply a public diary for people with a slightly exhibitionistic nature, or can it provide real value for others? One talk at the 4th Open Source Content Management event in Zurich (more details on this event will follow) offered an interesting idea: use your blog to find a job.

It sounds funny at first glance, but when you look at the properties of a blog you can see that employers might get some real information from a person's blog:

-

A blog which is current shows a certain level of activity and engagement on the side of the prospective employee

-

A blog which is current shows that the author is up to date with respect to latest technology

-

A blog - like most diaries - contains information on personal habits, emotional skills, goals etc. which are otherwise hard to evaluate during a short job interview.

-

Due to the cross-referencing inherent in blogging, the blogger's social and communicative involvement with a community becomes visible

-

The cross-referencing of blogs also serves as a reputation system that is rather hard to fake and which gives credibility to the other information extracted from the blog.

Taken together, these things should motivate students to become active bloggers if they want a decent job afterwards. But some critical aspects of blogging also came up in our discussion: the granularity of the entries is a problem. Once you start discussing something, the entries frequently turn into some kind of "micro article" which is at least 15-20 lines long but can be much longer. This disrupts the flow of a blog.

Blogging seems to be most effective when it is tied to a real-time event (blogging from Baghdad while US bombs are falling). Reporting on one's daily routines, on the other hand, can be quite boring.

Finally: a blog is a personal store for information and the social connectivity through cross-references is just a side-effect. But that is OK I guess.

Support for collaboration at the workplace and privately has improved a lot; at least the technical means are much better now. Most good projects run some kind of wikiwiki for everyday communication and storage (or simply as a way to survive in restricted intranets which outlaw ftp etc.). Chat is an indispensable tool for development groups and should be integrated into Eclipse as a plug-in. But what is still lacking in many projects is a culture of communication, and that is quite hard to establish.

-

- Kerberos - network authentication middleware

-

Authentication across networked machines is still a hot topic. The MIT Kerberos system has become a standard way to authenticate users across networked machines and to achieve single sign-on. Jason Garman's book "Kerberos: The Definitive Guide" is very helpful in explaining the principles behind Kerberos and how to use it as a security tool. I found the book extremely readable. The author explains the shift in threat models from host-based to network-based very well. His explanation of the security protocol underlying a Kerberos implementation is easy to understand yet precise. Readers learn how Kerberos protects their secrets by generating and using session keys.

The chapter on attacks against Kerberos (e.g. a man-in-the-middle attack made possible if authenticating servers do not use the full Kerberos protocol to validate client credentials) was very helpful.

The book is very helpful if you plan a cross-platform installation of Kerberos on Windows and Unix machines. But it also shows scalability problems with Kerberos in the area of system management, especially if cross-domain trust is needed. The fact that Kerberos does not deal with authorization is one of the reasons why Windows/Unix integration via Kerberos is still a cumbersome process. The problems of using passwords as credentials are also explained.

Many companies (both software providers and users) are focusing on Kerberos as the protocol for single sign-on. Public-key infrastructures look much better initially, but the key handling problems behind PKI still prevent large-scale use in many cases. To many companies the concept of central key distribution centers is quite natural.

Kerberos integration via other mechanisms (PAM, GSSAPI etc.) is an important topic, but I had the feeling that this would almost warrant another book, as the underlying problems are very complicated.

All in all I can only recommend kerberos and Jason Garman's book on it.

- Web Application Performance - no silver bullet

-

I spent a couple of days trying to speed up our new internet application, which uses a lot of XSLT processing (a model2+ architecture). This is not generally a problem because the resulting pages will be cached, but it is a problem for those pages which cannot be cached. What I saw was that every additional request for an uncached page resulted in a new generation run, which extended the response times of ALL those requests.

In other words: the relation between the number of parallel requests and the average response time is linear and leads to unacceptable response times beyond around 5 parallel requests. At first we thought that this behavior must be the result of a bug, an undetected synchronization point, a wrong servlet model etc. The general hope, shortly before deployment, was for a SILVER BULLET: to find ONE CAUSE for the performance problem. And for a short moment it looked like I had found one: a method with an unusually long runtime in the profiler, not counting its sub-methods. But a friend soon pointed out that this might only be an effect of the garbage collector. Sadly, he was quite right.

Unfortunately, the silver bullet case is not typical, especially not with web applications. Some quick considerations made us realize that the behavior described above is simply typical for CPU-bound processes/threads, and XSLT transformations are very CPU intensive. But until we realized this, we also learned a lot about other areas that contribute to bad performance: application server settings, garbage collection choices, transformation architecture. And we started evaluating other XSL compilers, even though e.g. Gregor crashes with our style sheets.
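The linear behavior follows from a simple model. Assume each uncached page needs S seconds of pure CPU time and N requests arrive at the same time on a machine with C CPUs. If the scheduler shares the CPUs fairly (processor sharing), all requests progress at the same rate, so each one finishes only after roughly max(1, N/C) * S seconds. The model is a deliberate simplification: it ignores garbage collection, I/O and thread-switching overhead, and the figures below are hypothetical, not measurements from our application.

```python
def mean_response_time(n_parallel, cpu_seconds, cpus=1):
    """Response time of n identical CPU-bound requests started together.

    Under ideal processor sharing every request gets cpus/n of a CPU (but at
    most one whole CPU), so it needs cpu_seconds / share of wall-clock time.
    """
    share = min(1.0, cpus / n_parallel)
    return cpu_seconds / share


# Hypothetical figures: 0.4 s of XSLT work per page on a 2-CPU box.
for n in range(1, 7):
    print(n, round(mean_response_time(n, 0.4, cpus=2), 2))
```

Under this model more CPUs only move the knee of the curve; beyond it the response time grows linearly with the number of parallel requests, so the real lever is the CPU work per uncached page, not a single hidden bug.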

What we don't want to do is to compromise our architecture but we might be forced to split some stylesheets into a pipeline of smaller ones.

Performance measurement tools for our application server are still far from optimal: with the WSAD profiler (used remotely against a small Unix box) I have to restart the application server, the profiler agent and my profiling client after every short run. (At least the kernel settings are now correct, so restarting is a bit faster.) Still, I could not get the resource advisors going.

What I learned from this case again was that performance testing needs to start early on the real hardware and that it is a tedious process which takes much longer than expected.

Starting Monday I will go through our memory allocations and check how the GC behaves. Perhaps I can squeeze out some more performance.

- The things we don't tell our wives...

-

are usually not what we do at the dentist. That's why this headline of a full-page ad by the German dentists (which organisation is actually behind it?) caught my attention. It turns out to be an ad against the planned health card, which is also supposed to contain medical statements and history in electronic form, protected by signatures from medical professionals.

The argument of the dentists' organisation goes like this: there are things we don't want to expose to medical professionals (or to our wives). Exposing medical details only partially makes the whole electronic health file rather useless, but the dangers for our privacy are enormous. Doctors and patients should not become "transparent" (the German original says "gläsern", meaning "like glass").

Let's look at the arguments line by line.

-

Since when does a German medical organisation care about the privacy of its patients? As far as I know, no doctor or dentist would earn a single penny by protecting patient privacy, which already makes the whole effort quite suspicious.

-

What could be so secret about going to the dentist that I would not tell my wife? I tried my best, but apart from some fantasies about big-busted assistants bending over the dentist's chair... I came up empty-handed. If you have any idea, please let me know. So why is the DENTIST organisation fighting health records in the hands of patients so hard? Only a really mean-spirited security guy would guess that this could have something to do with result tracking, avoiding double and triple x-rays etc.

-

And: my wife could not read the data from my health card anyway. This would require either my PIN or the health professional certificate of someone in a medical profession.

-

But surely the most interesting argument is the one where the dentists claim that neither patients nor doctors should become transparent. In arguments about security topics it frequently helps to change or substitute roles to get a fresh view on things. In this case let's replace the doctor with, e.g., our car mechanic. What would you say if he told you that your relationship is based on "trust" and that all data are best kept by him? As for the bill and the repair work done, you just have to trust him. He knows best.

This example makes us realize how the dentists' argument works: it tries to blur the distinction between a patient's right to privacy (and if a doctor becomes a patient herself, she can claim the same right to privacy) and the rights of a doctor WHEN ACTING AS A MEMBER OF A MEDICAL PROFESSION. There is a need to control those actions (e.g. to allow legal disputes about the quality of service or potential mistakes during treatment). Of course the one person to control these actions is the patient himself, if he has the data. And exactly those data should never leave the medical offices if the dentists have their way.

Clearly, defining the authorization and access control rules for medical data on the health card won't be easy. But the dentists' arguments against medical records under patient control are simply a way to fend off requests for result tracking, quality control etc. If you go to prozahn - datenschutz you will find more suspicious arguments: according to the dentists' organisation, patient data are best kept at the doctor's office and not on a card. "Who else would be interested in them?" They try to discredit the ministry of health as having an ill-advised interest in patient data. Of course, as long as the doctors have exclusive control over the patient data, real control and tracking are impossible.

Again, there are risks behind those data, e.g. if they got into the hands of insurance companies or employers. But while the ministry of health is certainly a candidate for suspicion, patients should not believe for one second that an organisation of medical professionals has intentions that have anything to do with what patients need...

So who is really behind the "dentists' ad"? It is of course the "Kassenzahnärztliche Vereinigung", a lobby organisation of German dentists, and at the same time an organisation which could be disintermediated by the health card if doctors and health insurers performed the accounting directly, without involving the KZBV.

The health card is surely a political minefield.

-

- How to use the Websphere Profiler

-

The following is only an introduction to profiling. It shows the Websphere Profiler, which is based on the JVM Debugging API. Websphere itself has more profiling interfaces, but that is another topic.

WSAD and Websphere include support for profiling. This includes an agent that needs to run on the target side and a Profile Perspective in WSAD that controls this agent. Profiling can be done in the WSAD development environment or against a live application running in a real Websphere application server. Only in the case of a real application server will the data on concurrency etc. be meaningful. Literature on application profiling can be found here: J2EE Application Profiling in Websphere Studio. There is also a tool report on the WSAD Profiler. The best introduction can be found in Chapter 17 of the IBM Redbook IBM Websphere V5.1 Performance, Scalability and High Availability, which covers everything about load balancing, availability, caching etc. on more than 1000 pages.

How to get the software:

-

Eclipse/WSAD

Even if you only want to profile with Eclipse, you need the IBM Agent Controller up and running. It comes with WSAD. Go to the bin subdirectory and execute SetConfig.bat to register the agent. Check in the Services view on Windows whether the agent is up and running.

-

Solaris

Get the Agent Controller for your platform and follow the installation instructions. If you need to do this by hand: The software comes as a rac.tar file which is zipped. It can be found on the IBM Websphere site. Unpack it in a subdirectory of the application server, e.g. /export/opt/5.0.1.7/. In the bin subdirectory open the file RAStart.sh and adjust the home directory path to where rac is installed. Execute RAStart.sh to get the daemon going. (Please note: if you kill the daemon it will take up to 4 minutes before you can run a new one.)

Install EAR file: The EAR that you want to profile needs to be installed on the application server. It MUST run properly or you will not get reasonable output from profiling (or you will not see the agent at all). If you only want to test remote profiling, you can use the EAR that comes with the article above (see attached file). It is best to unpack the EAR and install only the .war file. In this case you need to specify a doc root entry "MyEnterprise" when uploading the war module with the Websphere admin client ("install application" item).

Change application server configuration: Use the WAS admin console to change several settings of the server which should be profiled. You will need to follow the instructions from Chapter 17 of the performance redbook (see above):

----
See "Profiler configuration for WebSphere Studio test environment" on page 765 for information on how to profile an application in the WebSphere Studio test environment.

Profiler configuration for a remote process: The remote server should have been started with profiling enabled. To configure your application server for profiling, do the following:

* In the Administrative Console select Servers -> Application Servers -> appserver -> Process Definition. Enter -XrunpiAgent into the Executable arguments field and click OK.
* Go to Servers -> Application Servers -> appserver -> Process Definition. Select Java Virtual Machine from the Additional Properties pane. Enter -XrunpiAgent -DPD_DT_ENABLED=true into the Generic JVM arguments field and click OK.
* Save the configuration and restart the server(s).
* The next step is to attach to a Remote Java process for the server instance:
  a. In WebSphere Studio Application Developer, switch to the Profiling and Logging perspective. How to switch to the Profiling and Logging Perspective is shown in Figure 17-2 on page 765.
  b. Select Profile -> Attach -> Remote Process. This option can also be obtained by right-clicking in the profiling monitor.
* Go to "Generic Profiler configuration (local and remote environments)" on page 767.
----

Specify library path for application server: WAS will try to find the library libpiAgent.so when you specify the above JVM values. Therefore you need to extend LD_LIBRARY_PATH in the Websphere configuration to include the profiling libraries. Use the admin console and go to Application Server->[your server]->Process Definitions->Environment Entries->LD_LIBRARY_PATH and add the path to the lib directory of the installed profiler (e.g. /opt/rac/lib). Watch out for the different separators on Unix (:) and Windows (;). On a newly installed machine you may have to create the LD_LIBRARY_PATH variable by selecting "new".

Prepare Eclipse/WSAD: Under Window->Preferences->Logging and Profiling->Hosts add the name of the remote host. Click on connection test to check the connection. Always use fully qualified host names. Open the menu "Profile" and select Attach->Remote Process. Select the one you want to profile. Switch to the profiling perspective and follow the instructions from Chapter 17 or the other links from above.

Fixing problems: Sometimes no agents are shown when you want to attach to a remote or local agent. This is usually a sign that your application server has problems running the application. In those cases the agents seem unable to report this condition to the profiler; instead they disappear from the profiling view, even though they are still running and answer the connection test correctly. Here is what seems to work:

* Stop the application server you are profiling.
* Stop the controller agent (using ./RAStop.sh in the rac/bin directory).
* Wait 3-4 minutes and start the controller agent (using ./RAStart.sh). If the start fails, repeat this step until it works (;-)
* Start your application server.
* Go to the workbench/profiling perspective and attach to your controller agent again.

If you want to know whether the agent is still running on the remote side, or whether your local Eclipse has an open connection to the remote agent, there are two useful tools. On a Unix machine use "lsof | grep 10002" to see all processes with an open connection on port 10002 (the agent default port); on Windows download the Windows TCP tracker, which does the same. Tip: if you have problems with a large application, try to profile the demo program from [http://www-106.ibm.com/developerworks/websphere/library/techarticles/0311_manji/manji1.html] first. It comes as a small EAR file and provides a showcase for profiling.

Performance related resources: News about garbage collection and performance in new JDKs and Design for scalability - an update
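The same check can also be done from the workstation side with a plain TCP connect to the agent port. A minimal sketch: 10002 is the agent default port mentioned above, `port_open` is a hypothetical helper, and a successful connect only shows that something is listening on that port, not that it is a healthy Agent Controller.

```python
import socket


def port_open(host, port=10002, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, unknown host
        return False


# Placeholder host name; use the fully qualified name of your profiled server.
# print(port_open("appserver.example.com"))
```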

-

- OBSOC - Reflections on the software and social architecture behind the T-COM/Microsoft scandal

-

I have to admit that without Tagesschau.de and Heise.de confirming the story of the Chaos Computer Club I wouldn't have believed it for one second. Reading the Datenschleuder article on the T-COM hack ("hack" is actually much too strong a label for how the security leaks were discovered) stressed the muscles in my neck to their limits from shaking my head. First some facts for the benefit of our international friends, as most of the documentation provided is unfortunately in German.

Dirk Heringhaus published how he detected lots of severe bugs in the OBSOC web portal of T-COM. This portal is used by T-COM customers and personnel to administrate contracts, buy services etc. It also interfaces with many other systems within Telecom. Heringhaus discovered the following security failures:

Changing URLs made it possible to bypass authentication and authorization

Password handling for customers and admins was way below standard and allowed easy password guessing

Customers and Admins used the same entrance (with the same low level security)

Implementors of OBSOC held critical data (e.g. SQL-Server backups on private domains, including domain passwords)

It was possible to break through from web application space into windows domain space.

Those are the most critical TECHNICAL failures. The whole story gets much worse when one adds the way the companies involved dealt with the problems:

No or delayed reaction

Even in critical situations important messages need to go through the call center.

Only private contacts help to solve those contact problems

Solutions are provided using the "patchwork pattern": fix a little bit every time.

This is the point where one could start pondering over the unhealthy alliance of two monopolies (Telecom and Microsoft) and the role and value customers play under such conditions. And the role politics play by not punishing those companies or by not making current EU laws also valid in Germany. Historically there are many sweet connections between the ruling social democrats and the former state-owned Telecom.

And if you want to have some fun, read the Microsoft brochure on web services where they brag about the way OBSOC was implemented using .NET. I think the author would now rather bite his tongue off...

In any case: go to the download page of t-com hack to get the full details like role levels, network info and lots of T-COM and MS bragging.

But this would be a cheap shot (why be hard on a company whose latest IE fixes lasted exactly ONE DAY, from Friday 7/31/04 to Saturday 8/1/04, before they had to be revoked and replaced?)

Though tempting I will not go down this road and instead speculate a bit on the software architectures behind the security failures.

-

No central entry to OBSOC software?

Heringhaus told T-COM about a problem with a variable X in some URLs, and it was sometimes fixed the next day. But other uses of X in different requests were not affected by the fix. This points to a specific software pattern, in this case a Model 1 architecture that uses templates. To be secure, those templates need to run the same security checks every time they are called. A familiar problem with this architecture is that programmers tend to forget those checks, as they are not enforced architecturally.

The use of the well-known Microsoft patchwork pattern for security points in the same direction: fix only what you absolutely have to fix. No architectural considerations needed.
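The architectural alternative is a single entry point (a front controller, as in Model 2 architectures) that runs the security checks before dispatching to any page handler, so a check forgotten in one page cannot open a hole. A minimal sketch; the `Request` type, the `page` registration decorator and the route are hypothetical illustrations, not OBSOC's actual design.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple


@dataclass
class Request:
    path: str
    user: Optional[str]  # None means "not authenticated"
    params: Dict[str, str] = field(default_factory=dict)


# Page handlers are registered here and are only reachable via front_controller().
HANDLERS: Dict[str, Callable[[Request], str]] = {}


def page(path):
    """Register a handler for `path`."""
    def register(fn):
        HANDLERS[path] = fn
        return fn
    return register


def front_controller(req: Request) -> Tuple[int, str]:
    # Checks that apply to every request live in one place ...
    if req.user is None:
        return 401, "login required"
    handler = HANDLERS.get(req.path)
    if handler is None:
        return 404, "not found"
    # ... so individual handlers cannot forget them.
    return 200, handler(req)


@page("/contracts")
def contracts(req: Request) -> str:
    return f"contracts of {req.user}"


print(front_controller(Request("/contracts", None)))     # (401, 'login required')
print(front_controller(Request("/contracts", "alice")))  # (200, 'contracts of alice')
```

Per-object restrictions such as "only your own account" still have to be enforced in the handlers or a shared service layer; the front controller only guarantees that the global checks cannot be bypassed.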

-

No context sensitive access control checks?

Changing an account number resulted in seeing data related to this - foreign - account. This can only happen if no context sensitive access control is implemented. As a customer with an account you will need the right "can read or write account information". This is quite normal method level access control. But it is valid ONLY in context with the restriction: ONLY YOUR ACCOUNT. How the implementors of T-COM and Microsoft could miss this one totally escapes me.
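The missing check can be sketched as two layers: the method-level permission ("can read or write account information") and the context restriction ("ONLY YOUR ACCOUNT"). The ownership table and role names below are hypothetical; the point is that an account number taken from a URL is never trusted on its own.

```python
# Hypothetical data: which customer owns which account, and what each role may do.
ACCOUNT_OWNER = {"4711": "alice", "0815": "bob"}
ROLE_PERMISSIONS = {"customer": {"account:read", "account:write"}}


def can_access(user, role, permission, account_no):
    """Grant access only if both the method-level and the context check pass."""
    # 1. Method level: does the role carry this permission at all?
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        return False
    # 2. Context level: the account number (e.g. from the URL) must belong to the user.
    return ACCOUNT_OWNER.get(account_no) == user


print(can_access("alice", "customer", "account:read", "4711"))  # True: her own account
print(can_access("alice", "customer", "account:read", "0815"))  # False: Bob's account
```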

-

From Web to domain?

This is the part I am least familiar with, because I always teach to NEVER EVER expose Windows domains/shares etc. to the internet, as we all know that the protocols used here cannot do this securely. Why the T-COM infrastructure allowed this again totally escapes me.

-

Authentication

T-COM customers use weak authentication. But should this also be true for admins? Only now are the entry points for admins separated from the customers' global internet access. Again: unbelievable that this was not the case until now.

Again, I can only recommend reading the information available at datenschleuder. But a last statement on the costs of security, as depicted by Wilfried Schulze in his presentation on IT-Security - Risks and Measures (sorry, in German): Schulze says that the price of security is small compared to the losses. Yes, if done the T-COM and Microsoft way: focus on convenience and drown everything in a techno-speak soup à la web services.

But fixing the problems of OBSOC will NOT BE CHEAP, because obviously there is no security framework in place at T-COM. Otherwise this application would never have been deployed, and the reactions to problems would have been very different. Just think about the way user-ids and passwords were handled. And this is actually the most worrying part: a huge company that has no concept of security for its clients. No wonder T-COM and Microsoft consider themselves a perfect match (sorry, could not resist (;-))

To be fair: only now are IT-Security personnel learning to ask questions about the software deployed. What requests does it expect? Which parameters? Which actions are performed? This means that IT-Security people will have to stock up on software know-how, and software programmers will have to realize that just programming a solution is not enough anymore. Unfortunately, the two areas, network IT-Security and secure software development, are rather separate, and only few people can live in both worlds.

Let's assume your company has deployed a security framework that requires e.g. security sign-offs during various stages of software planning and implementation. Would your IT-Security experts have noticed the problems with state kept on the client side without further checks? Don't be too sure! In many cases IT-Security today is still dominated by network security.
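One common mitigation for state that must travel through the client (not something the OBSOC material describes) is to bind it to a server-side secret with a MAC, so that tampering is detected. A minimal Python sketch using only the standard `hmac` and `hashlib` modules; the key and the token format are hypothetical:

```python
import hashlib
import hmac

SERVER_KEY = b"hypothetical-server-secret"  # stays on the server, never sent out


def seal(state: str) -> str:
    """Attach a MAC so the server can detect changes to client-held state."""
    tag = hmac.new(SERVER_KEY, state.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{state}|{tag}"


def unseal(token: str) -> str:
    """Return the state if the MAC verifies, otherwise raise ValueError."""
    state, _, tag = token.rpartition("|")
    expected = hmac.new(SERVER_KEY, state.encode("utf-8"), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("client-side state was modified")
    return state


token = seal("account=1001")
print(unseal(token))  # account=1001
forged = token.replace("account=1001", "account=1002")
# unseal(forged) raises ValueError
```

Note that a MAC only proves the server issued the value; it does not replace the context-sensitive access check on every request.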

And finally: I don't think that the problems described result from using the .NET framework. But there is an astonishing gap between the high-tech concepts described in .NET and the basic security problems found in OBSOC. This raises the question: did the programmers even understand what they were using and doing?

- Naming Service and Deployment in J2EE

-

Naming services have a profound impact on application deployment and migration in J2EE, as I recently learned the hard way. In 1997 I was involved in one of the first Component Broker installations, and I still remember how we tried to separate development, test and production areas, e.g. through the use of different name spaces (based on DCE cells at that time). It looks like the concepts are still pretty much the same. Clustering adds some more complexity, with different name space standards for path information etc.

When our backend connector failed to deliver data today (something that worked flawlessly yesterday), I began to suspect that the naming service involved might not work properly. The exception thrown indicated a failed name space lookup for our session facade.

That's when I started looking for some recent info on naming in J2EE and for a JNDI browser that would let me see what was really deployed. (I must say that our deployment is really modeled after the J2EE roles, and I have little or no influence on deployed code once it has left the development phase.) Here is what I found:

The first surprise was that there are now three different levels of naming services: cell, node and server. Cell and node were actually quite familiar from DCE, but now every application server is also a naming service.

Then I discovered that WebSphere, for example, already comes with a namespace browser (actually a dumper) called dumpNameSpace. Search for it in the help or go there: dumpNameSpace. You will find the tool, together with a shell script to run it, in wasd/runtimes/basexx/bin. It prints out whatever it finds in a given naming service (specified through its port).

In our case, when I ran the tool I noticed that some errors were reported when the dumper tried to follow URLs from the node naming service to the application server naming service. After a complete restart of the whole cluster the errors disappeared and our objects showed up in the application server naming service.
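What such a dumper does, and why a silent application server shows up as an error at a link rather than as a missing object, can be modelled in a few lines. A toy Python sketch, not the dumpNameSpace implementation: naming servers are plain dictionaries keyed by a hypothetical port, and `Link` is a hypothetical stand-in for a reference into another naming server:

```python
class Link:
    """A reference from one naming server into another (identified by its port)."""

    def __init__(self, port):
        self.port = port


def dump(servers, context, prefix="", out=None):
    """Recursively list a naming context, following links to other servers."""
    out = [] if out is None else out
    for name in sorted(context):
        entry = context[name]
        path = f"{prefix}/{name}"
        if isinstance(entry, Link):
            target = servers.get(entry.port)
            if target is None:
                out.append(f"ERROR {path}: naming server on port {entry.port} does not answer")
            else:
                dump(servers, target, path, out)
        elif isinstance(entry, dict):
            dump(servers, entry, path, out)
        else:
            out.append(f"{path} -> {entry}")
    return out


# Hypothetical layout: a cell/node context linking to one application server.
servers = {
    9809: {"cell": {"nodes": {"node1": {"servers": {"server1": Link(2809)}}}}},
    2809: {"ejb": {"SessionFacade": "SessionFacadeHome"}},
}
healthy = dump(servers, servers[9809])
# ['/cell/nodes/node1/servers/server1/ejb/SessionFacade -> SessionFacadeHome']

del servers[2809]  # the application server's naming service stops answering
broken = dump(servers, servers[9809])
# ['ERROR /cell/nodes/node1/servers/server1: naming server on port 2809 does not answer']
```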

If you don't like command-line batch tools, get a visual namespace browser:

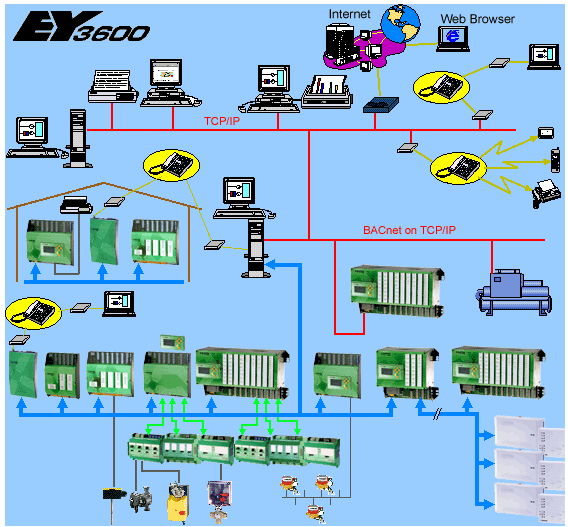

What certainly pays off is getting familiar with the concepts of clustering and name spaces, e.g. in Effects of naming on migration. If you are just interested in how the implementation of the naming service changed in WebSphere, you can find the latest information here: What's new in WAS5 - Naming. The diagram from above is also from the WebSphere world, and it made things a lot clearer (e.g. that it is not a good sign if an application server does not answer naming requests...). And there is a good paper on WebSphere clustering on Redbooks.ibm.com, together with more information on availability and reliability.

- Highlights of last term's software projects

-

If you thought your private notes on your PDA or smartphone were hidden from prying eyes, take a look at Bluetooth security hacks. For a nice example of model-driven software development look at Strutsbox, and for an overview of all projects (in German) go to the treatment list.

- Character sets and encodings - always a dreadful thing?

-

Get some good advice on character sets and encodings from my friend and colleague Guillaume Robert:

The main reason why character encoding is a mess is the confusion people make between "what" they want to define and "how" they define it.

Example 1: what: a list of integers in the [0;255] range. how: with the characters 0, 1 and comma, in a binary encoding. sample: 01010101,00001111,11110000
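The what/how split of Example 1 in a few lines of Python: the list of integers is the "what", the comma-separated 8-bit binary strings are one possible "how", and decoding recovers exactly the same "what":

```python
numbers = [85, 15, 240]  # the "what": integers in [0;255]

# The "how": each integer as 8 binary digits, separated by commas.
encoded = ",".join(format(n, "08b") for n in numbers)
print(encoded)  # 01010101,00001111,11110000

# Decoding the "how" gives back the original "what".
decoded = [int(bits, 2) for bits in encoded.split(",")]
assert decoded == numbers
```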

Example 2: HTML pages. what: an HTML page with characters from ISO-10646. how: with characters from ISO-8859-1. sample: `abcéö&#1106;`. Contrary to what people think, browsers only render ISO-10646 characters: Browser rendering. With an HTML header such as `<meta http-equiv="Content-Type" content="text/html; charset=iso-8859-1">` you just indicate that the HTML source is written with ISO-8859-1 characters: Charsets. Since ISO-8859-1 is a subset of ISO-10646 and contains most of the characters you'd need, the source can usually be written without any particular care. what: c'est l'été ! (in ISO-10646) how: c'est l'été ! (in ISO-8859-1). Things get tricky when you need to display a character that does not exist in your source encoding (ISO-8859-1 is an old, small set of only 256 characters). Then you use the escaping mechanism and reference the ISO-10646 character by its code.

Example 3: what: é,á,{EURO SIGN},{CYRILLIC SMALL LETTER DJE} (in ISO-10646). how: `é,á,&#8364;,&#1106;` (in ISO-8859-1). how: `&#233;,&#225;,&#8364;,&#1106;` (in 7-bit ASCII).
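Python's codecs can apply this escaping mechanism directly: the standard `xmlcharrefreplace` error handler replaces every character the target encoding cannot represent with a numeric character reference. A minimal sketch reproducing the two "how" lines of Example 3, with `html.unescape` standing in for a browser that turns both forms back into the same ISO-10646 text:

```python
import html

# The "what": é, á, Euro sign, Cyrillic small letter dje.
text = "\u00e9,\u00e1,\u20ac,\u0452"

# "how" in ISO-8859-1: é and á exist there; the Euro sign and dje are escaped.
latin1 = text.encode("iso-8859-1", errors="xmlcharrefreplace")
print(latin1)  # b'\xe9,\xe1,&#8364;,&#1106;'

# "how" in 7-bit ASCII: every non-ASCII character becomes a numeric reference.
ascii7 = text.encode("ascii", errors="xmlcharrefreplace")
print(ascii7)  # b'&#233;,&#225;,&#8364;,&#1106;'

# Both "hows" describe the same "what".
assert html.unescape(latin1.decode("iso-8859-1")) == text
assert html.unescape(ascii7.decode("ascii")) == text
```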

Example 4: HTML entities. For convenience, HTML offers a user-friendly alternative syntax for the most common escapes: `&eacute;` <=> `&#233;`, `&euro;` <=> `&#8364;`.

Extended ISO-8859-1: Some widely used characters are not part of ISO-8859-1 because they appeared later (the Euro) or were forgotten (the French "oe"). The [128-159] range in ISO-8859-1 is reserved and not used for printable characters. For convenience, web browsers map these characters into this range. Be aware that some nodes in your source generation chain may still ignore this range (SAX, for example): `&#128;` <=> `&#8364;`.
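Both points of Example 4 can be checked with Python's standard library. The browser behaviour comes from the windows-1252 table (the WHATWG Encoding Standard treats the label iso-8859-1 as windows-1252), and Python's `html.unescape` follows the HTML5 rules that map `&#128;` the same way; a strict ISO-8859-1 decoder, by contrast, yields an invisible C1 control character:

```python
import html

# Named and numeric references denote the same ISO-10646 character.
assert html.unescape("&eacute;") == html.unescape("&#233;") == "\u00e9"
assert html.unescape("&euro;") == html.unescape("&#8364;") == "\u20ac"

# Byte 0x80 is not a printable character in strict ISO-8859-1 (a C1 control) ...
assert b"\x80".decode("iso-8859-1") == "\x80"
# ... but windows-1252, used by browsers for pages labelled iso-8859-1, maps it to the Euro sign.
assert b"\x80".decode("cp1252") == "\u20ac"

# HTML5 parsing rules (implemented by Python's html module) do the same for &#128;.
assert html.unescape("&#128;") == "\u20ac"
```

A tool in the chain that decodes strictly (the first of the two byte-level asserts) is exactly the kind of node that "ignores this range".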

See also: chars.html and internat.html.

Hope it helps. Cheers, Guillaume

- Service Oriented Architecture (SOA)

-

Good articles on SOA can be found here: Developerworks on SOA. I am not sure about the role and effects of SOA, but the hype is rather big right now. I guess once Web Services are REALLY implemented everywhere (and perform like RPC but without the smell of RPC), SOA will be the next big thing. Or not (;-).

- Granular access control - what we can learn from multi-level databases

-

Multi-function cards like the proposed German healthcard hold a lot of information about patients, medication, treatment etc. A big problem with respect to privacy and user control is the granularity of the information: "file" is not really an appropriate unit here, as some information in a medical statement might be public while other parts need to be controlled by the patient. While reading through Charles and Shari Pfleeger's book "Security in Computing" I stumbled upon the chapter on database security. I am not a database expert, but I have some statistics background, so this chapter was really quite interesting for me. Besides inference and aggregation problems, where users can extract more information from database tables than they are entitled to, multilevel database technology caught my attention.

The book lists the following forces:

-

"The security of a single element may be different from the security of other elements of the same record or from other values of the same attribute"

-

"Two levels - sensitive and nonsensitive - are inadequate to represent some security situations."

-

"The security of an aggregate - a sum, a count or a group of values in a database - may be different from the security of the individual elements."

The quotes are all from page 343ff.
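The three forces translate into element-level labels, more than two levels, and a separate label for an aggregate. A minimal Python sketch with hypothetical patient records and a hypothetical three-level ordering; it illustrates the idea of element-level labelling, not any particular multilevel DBMS:

```python
from enum import IntEnum


class Level(IntEnum):
    PUBLIC = 0
    CONFIDENTIAL = 1
    SECRET = 2


# Hypothetical records: every element carries its own label.
records = [
    {"name": ("Alice", Level.PUBLIC),
     "diagnosis": ("flu", Level.CONFIDENTIAL),
     "test_result": ("negative", Level.SECRET)},
    {"name": ("Bob", Level.PUBLIC),
     "diagnosis": ("fracture", Level.CONFIDENTIAL),
     "test_result": ("positive", Level.SECRET)},
]

# The aggregate has a label of its own, here lower than its elements.
COUNT_POSITIVE_LEVEL = Level.CONFIDENTIAL


def view(record, clearance):
    """Return only the elements whose label is dominated by the clearance."""
    return {field: value for field, (value, label) in record.items()
            if clearance >= label}


def count_positive(clearance):
    """Aggregate query, checked against the aggregate's label, not the elements'."""
    if clearance < COUNT_POSITIVE_LEVEL:
        raise PermissionError("aggregate is above the caller's clearance")
    return sum(1 for r in records if r["test_result"][0] == "positive")


print(view(records[0], Level.PUBLIC))  # {'name': 'Alice'}
print(count_positive(Level.CONFIDENTIAL))  # 1
```

With only two records, such a count already invites the inference problems mentioned above, which is why the labelling of aggregates needs as much care as that of single elements.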

This is exactly the same case as with health information on smartcards. It is a problem of granularity, integrity and confidentiality. The book lists a number of techniques to solve those problems, e.g. partitioning (does not really solve the problem of distinct views on the same data and introduces redundancy problems), encryption (integrity locks, which blow up data storage needs considerably) and trusted front ends (an intermediate that performs additional access controls like a filter but needs to throw away a lot of information). Other alternatives are commutative filters (like method control by containers in J2EE, which tries to use the backend functionality) or distributed databases (which, in the case of DB front ends, tend to develop into a DB themselves) (from pages 346ff.).

In the case of Finread card readers, we have intelligent front ends that protect partitioned data. The combination of smartcard data is still an open issue.

The book itself is a sound and well-written handbook of computer security. It covers cryptography, operating system security and administration (with risk analysis). Software issues like virus technology are described as well. But it is not a book on software security. SSL and Kerberos are not explained in depth, but the explanations of security problems are well worth the money. It gives you the basic know-how to then read something like the WebSphere or WebLogic security manuals. If you need a handbook on security, this one is a good one.

- Richard Gabriel, Patterns of Software

-

I didn't know that this wonderful book is now freely available (I took the reference from Jorn Bettin's article on MDSD). Richard Gabriel is one of the key figures of Lisp and tells the story of how an incredibly powerful technology did not make it in the end. The book covers famous concepts like "worse is better", but also some sad stories about today's management (especially moving is the part where he tells how the newly hired CEO always called in sick when layoffs had to be announced). If you need something to read at the beach, get it.

- Workshop on generative computing and model-driven-architecture

-

The computer science and media department at HDM offers another workshop on generative computing and MDA. This time we will see:

-

A practical use of MDA at large companies (by Markus Reiter, Joachim Hengge - Softlab/HDM)

-

Automatic composition of business processes between companies - using semantic technologies and SOA. (by Christoph Diefenthal - Fraunhofer IAO/HDM)

-

Practical metamodelling techniques using Smalltalk (by Claus Gittinger, exept AG)

-

MDA used for a large scale enterprise application integration framework (by Marcel Rassinger, e2e Basel)

I will give a short introduction to what we did in generative computing this term and what we learned. Practical work included building a generative support package for Struts using the Eclipse Modeling Framework and JET, AspectJ applications, bytecode generation etc.

Like last time I expect some lively discussions around questions like:

Do you need generative approaches if you have a truly powerful programming language? (Are domain-specific languages necessarily different from implementation languages? Do you always have to generate code, or is intelligent interpretation of models a more flexible alternative?) Service-oriented architecture has lately put a lot of emphasis on semantic technologies (RDF(S), OWL). Is this competition for the UML/MOF concepts of the OMG? Is XML more than a dump format? What are the current limits of MDA use in industry? How does one define custom semantics in modelling languages, and which tools provide support for this in UML? What do employees need to learn to use generative technologies successfully? (Is there really some value in theoretical computer science? (;-)) Generation without models: useful? What are the options (frame processing, templates etc.)? What are the limits?

If you can read German I'd suggest reading the article by Markus Voelter on MDA in the latest OBJEKTspektrum magazine. It provides an overview of current issues with MDA. Download it from Voelter's homepage, or read a short introduction to MDSD by Dave Frankel. The MDSD homepage has more resources on generative approaches. Softmetaware has a nice collection of MDA/MDSD tools, e.g. OpenArchitectureWare, an open source framework for MDA/MDSD generation purposes. Or go for Jorn Bettin, Model-Driven Software Development, which covers MDSD quite extensively.

-

- The German health card - security architecture

-

This term, card systems were a major topic in my lecture on internet security. With the help of my students I tried to understand the large-scale architecture of bit4health. First I'd like to show you which resources I used, and then what we learned.

Most of the resources around the healthcard can be downloaded from DIMDI, a large medical database. But you should start with an article by Christiane Schulzki-Haddouti, "Signaturbuendnis macht Dampf" (thanks to Ralf Schmauder for the tip). She discusses the healthcard in the context of two other big projects: the jobcard (see below) and the signature card. The plans for the healthcard are ambitious, to say the least: it should be introduced in 2005 and be available everywhere in 2006. But a lot of questions are still open:

Can an existing infrastructure, e.g. from financial organisations, be re-used? Which card readers will be selected? Where will patients use them? What functionality will be provided on the card and which on the network? And last but not least: who will issue, authorize, maintain, store etc. vital information? But more important than the question of when the card will be introduced is its architecture, especially the security architecture.

I started the analysis by downloading two PDF files from DIMDI. The papers provide a general overview and a more specific view on the security architecture. Interestingly, they use the terminology of the IBM Global Services Method, which we have covered this term.

The papers are not bad, but it is quite easy to get lost in high-level IT terminology and diagrams. The security architecture is based on RM-ODP, the Reference Model of Open Distributed Processing. This is an architecture that cleanly separates security services and mechanisms. The healthcard project defines extensibility and interoperability as general goals and needs this separation. Security requirements are defined as well, but again on a fairly high level.

The whole healthcard security design was still quite unclear after going through these papers. Then I found the "telematik buch" - written by two medical professionals. This book in turn gives you all the background information needed to understand the security problems behind the health card.

Again it became obvious that with large scale projects like the healthcard a top down analysis - starting with a security context diagram of the involved entities - is key to a sensible use of security technology. You just have to understand the problem domain to get the security right.

A small example: Between the doctors and the insurance organisations there exists an organization which collects the invoices from the doctors, does the invoice processing with the insurances and then hands back the money to the doctors. Of course there are security technologies which could establish a secure direct connection between the doctors and the insurances but this proposal would probably require changes in law.

Another example, also from the book: in Germany, patients can pick the doctor or hospital of their choice. A doctor can state the results of an examination and e.g. propose a treatment in a hospital, but the receiver of this statement is unclear at the time of issuing. One consequence is that public key based encryption (using the public key of the receiver) is not possible, because the receiver is not known in advance.

Several technologies can mitigate this problem, ranging from storing all data on the patient's healthcard to keeping all data on a network and storing only access keys on the smartcard.

The book also covers the financial background of the healthcards. It analyses the current, mostly paper-based procedures, e.g. with pharmaceutical prescriptions, and calculates the costs involved. This gives a basis for financial calculations of the possible expenses for a new solution. Doctors, pharmacies, hospitals and emergency vehicles all need to be equipped with the new technology, but it is unclear where the benefits really are. And without benefits no investments will be made.

I will discuss two rather hot topics related to the proposed smartcard-based healthcard: control of medical actions and decisions, and the question of internet pharmacies. Once the medical professionals are all equipped with a "medical profession card", which includes a digital signature, they can sign their reports and statements and put those signatures on the patient's healthcard. Right now most of this information is kept in the doctor's storage and is not protected against changes or loss. Stored on the patient's card, this information allows e.g. experts (or expert systems) to check the correctness of a treatment, and doctors will be obliged to study the information put there by colleagues. Many doctors tend to put forward concerns about the patients' privacy when in reality they are concerned about becoming transparent with respect to the correct treatment.

The proposed healthcard system will require clear standards for medical data exchange. Repositories and schemas need to be defined and maintained. Once those are in place, medical professionals can confirm actions (like accepting a prescription and handing out a specific drug) with their signatures. A pharmacist can put this information right on the patient's card, and the patient can use it later on for reimbursement by the insurance. The pharmacy needs to install a smartcard reader/writer station. But how would this work on the internet? Besides legal issues, the patient needs a written statement by the pharmacy, signed and placed on the card. How would e.g. DocMorris put this onto a patient's card? If a patient owns a reader/writer, perhaps connected to a PC which in turn is connected to the internet, this would not be a problem (see the Finread article below). But exactly this point has so far been left unspecified: who will own/control the readers/writers? A smartcard system without them will put the patients in a rather helpless position. And I doubt that the local pharmacy will allow its readers to be used for internet orders (;-)

- Finread Card Reader

-

The bit on the proposed healthcard above makes it rather clear that the smartcard reader/writer plays a central role in every security architecture. The financial industry is very much aware of the current problems with PIN/TAN devices and the vulnerability of PCs as e-business devices. Finread is a proposed standard for a class 5 smartcard reader/writer which can run embedded software used to protect the smartcards. PC applications can still use card information, but all access (in secure mode) is controlled by so-called Finread Card Reader Applications running within the reader.

- Mobile Communication - how is it different to fixed, static networks?

-

Distributed systems have long suffered from an exaggerated quest for transparency. Hiding concurrency and remoteness from application programmers was both necessary and dangerous at the same time. It seduced programmers into a programming style which disregarded the fact of distribution and ended with slow or bug-ridden software (best explained in the famous Waldo paper).

Mobile communication has the potential for the same misunderstandings and mistakes. Let me explain why, and at the same time tell you what I've learned from Jochen Schiller's wonderful book on mobile communication.

Wireless networks have some important characteristics which make them very different from wired networks and which require a different way of thinking and programming. Good examples are the problems of hidden and exposed terminals, where some participants in wireless communication can reach some others but not all within a group. Even though a node or terminal cannot communicate with another node, it can still be hindered by this node, e.g. because both nodes try to send messages at the same time, which can make reception at a third node impossible. Schiller explains all those complications in the first chapters of the book.

Cell design of wireless networks can only be understood if one knows the many ways the wireless spectrum can be divided between participants: space, time, frequency and code separation are important concepts, and Schiller excels in explaining them in a way that even software-oriented engineers can grasp. No need to be an electrical engineer here. These chapters provide a solid base for the introduction of several wireless technologies, ranging from satellite communication via GSM/UMTS to WLAN and Bluetooth. I was able to recognize the importance of home and visitor location registers as the main pattern for roaming in wireless networks and ended up with a good understanding of the GSM architecture.
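Code separation (CDMA) is probably the least intuitive of the four for software people: several senders use the same frequency at the same time, and the receiver separates them by correlating with each sender's code. A minimal Python sketch, assuming two senders with orthogonal length-4 Walsh codes and an ideal, noise-free channel in which signals simply add up:

```python
# Two orthogonal chipping codes (Walsh codes of length 4), assumed for illustration.
CODE_A = [1, 1, 1, 1]
CODE_B = [1, -1, 1, -1]


def spread(bits, code):
    """Map bit 1 -> +1 and 0 -> -1, then multiply by every chip of the code."""
    return [(1 if b else -1) * c for b in bits for c in code]


def despread(signal, code):
    """Correlate each code-length slice with the code; the sign gives the bit."""
    n = len(code)
    bits = []
    for i in range(0, len(signal), n):
        corr = sum(s * c for s, c in zip(signal[i:i + n], code))
        bits.append(1 if corr > 0 else 0)
    return bits


a_bits, b_bits = [1, 0, 1], [0, 0, 1]
# Both senders transmit simultaneously on the same frequency; the signals add up.
channel = [x + y for x, y in zip(spread(a_bits, CODE_A), spread(b_bits, CODE_B))]

print(despread(channel, CODE_A))  # [1, 0, 1]
print(despread(channel, CODE_B))  # [0, 0, 1]
```

Because the codes are orthogonal (their chip-wise product sums to zero), the other sender's contribution cancels out in the correlation.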

Then the fun really starts in the chapter on mobile communication at the network layer. Here Schiller shows how basic assumptions from fixed networks about availability, speed and routing break down completely. Communication in wireless networks can be possible in one direction but not the other. Counting transmit time using hops can be misleading, and so on. And the biggest problems show up in the area of routing. Static routing schemes require an always-on state which will quickly drain the batteries of small mobile devices. Dynamic routing schemes are needed here.

The part on Mobile IP is one of the best in the book. Schiller explains the problems mobile nodes face (note that this has NOTHING to do with wireless; it is just about moving your laptop around the world). Solutions require agents in the home and foreign networks, and Schiller shows that tunneling in both directions is needed for transparent mobility. The security problems related to Mobile IP are largely unsolved. They require manipulation of routing tables, which is clearly a security problem if done without authorization. IPv6 will help here only to a certain degree.
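The core of the tunneling idea is IP-in-IP encapsulation driven by a binding table at the home agent. A simplified Python model (not a protocol implementation; the addresses are documentation/example addresses chosen for illustration) showing the forward tunnel, the decapsulation at the foreign agent, and the reverse tunnel back to the home agent:

```python
from dataclasses import dataclass


@dataclass
class Packet:
    src: str
    dst: str
    payload: object  # data, or an inner Packet when encapsulated


class HomeAgent:
    def __init__(self, address):
        self.address = address
        self.bindings = {}  # home address -> care-of address

    def register(self, home_addr, care_of):
        # Real Mobile IP must authenticate this step: whoever can change a
        # binding can redirect another node's traffic.
        self.bindings[home_addr] = care_of

    def forward(self, packet):
        care_of = self.bindings.get(packet.dst)
        if care_of is None:
            return packet  # node is at home: deliver normally
        # Tunnel: wrap the original packet in one addressed to the care-of address.
        return Packet(self.address, care_of, packet)


def decapsulate(packet):
    """Foreign agent (or mobile node) unwraps the tunneled packet."""
    return packet.payload if isinstance(packet.payload, Packet) else packet


def reverse_tunnel(packet, care_of, home_agent_addr):
    """Outgoing traffic is wrapped and sent back through the home agent."""
    return Packet(care_of, home_agent_addr, packet)


ha = HomeAgent("10.0.0.1")
ha.register("10.0.0.42", "192.0.2.7")
original = Packet("198.51.100.5", "10.0.0.42", "hello")
tunneled = ha.forward(original)
print(tunneled.dst)                       # 192.0.2.7
print(decapsulate(tunneled) == original)  # True
```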

Another highlight of the book is the part on TCP/IP for mobile devices. Did you know that some of the best algorithms used by TCP break down once they are used in wireless networks? Modern TCP assumes a congested network when packets are lost. Overloaded routers throw away packets when they cannot keep up with the traffic, and consequently TCP quickly makes the senders fall back into a much slower sending mode. But this is exactly the wrong behavior in wireless networks. Here lost packets are NOT a sign of a congested network. Instead, a nearby source of noise might have wiped out some packets, and reducing the sending rate will simply decrease throughput. Schiller shows several improvements to TCP using proxies or different algorithms.
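The effect can be seen even in a toy model of TCP's additive-increase/multiplicative-decrease rule. A minimal Python sketch under strong simplifications (one loop iteration per round-trip time, no slow start, timeouts or retransmission costs, hypothetical noise losses every 10th round): one sender halves its window on every loss, the other knows the losses are radio noise and keeps going:

```python
def simulate(rounds, loss_rounds, loss_means_congestion, max_window=32):
    """Toy AIMD model; returns the number of segments sent.

    cwnd grows by one segment per round (additive increase) up to max_window.
    On a loss the window is halved (multiplicative decrease), but only if the
    sender interprets the loss as congestion.
    """
    cwnd, sent = 1, 0
    for r in range(rounds):
        sent += cwnd
        if r in loss_rounds and loss_means_congestion:
            cwnd = max(1, cwnd // 2)
        else:
            cwnd = min(max_window, cwnd + 1)
    return sent


# Hypothetical radio noise wipes out a packet every 10th round.
noise = set(range(10, 100, 10))
classic = simulate(100, noise, loss_means_congestion=True)
aware = simulate(100, noise, loss_means_congestion=False)
print(classic, aware)  # 1134 2704
```

In this model the loss-aware sender transmits more than twice as much; proxies or modified algorithms such as those Schiller describes aim to recover part of that difference.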

In the chapter on support infrastructures for mobile services, Schiller discusses the big problems of mobile clients: caching, disconnected operation with synchronization and replication issues, push technologies (like OTA), adaptation to device capabilities etc. He also explains why WAP over GSM had to fail and why i-mode is a big success: interactive applications (e.g. browsing) over a circuit-switched connection just do not work. It is in this chapter where actually a new book starts: one on WAP 2.0, mobile multimedia applications, mobile development environments and operating systems and so on. And not to forget security, which seems to be quite critical with all the push technology used.

But what I liked best was all the information on ad-hoc networks. Self-organization using wireless connectivity could become a very important feature, e.g. for third world countries. It is comparable to the current drive toward peer-to-peer architectures in distributed systems. This technology has the potential to be a disruptive technology. If you want to learn more, search for "mesh networks" on Google or go to Schiller's homepage.

- Automatic composition of web services into business processes using intermediates

-

Together with the Fraunhofer IAO, Christoph Diefenthal, a student of mine, developed a system which automatically creates and runs a business process (in this case a procurement) between two companies. The companies involved need not adjust their web service interfaces. Transformation and conversion of services and parameters are handled by an intermediate service which can run anywhere. But the companies need to create a semantically rich description both of their own service and of the kind of services they expect from their partners. Those descriptions are input to the intermediate (flow) service, which creates a combined process description and then translates it into the BPEL4WS format. A new web service is generated which implements and runs the process description.

The work uses several description schemas (RDF, RDFS, OWL, BPEL4WS) to achieve its goal. Some problems still exist though, especially in the area of security where the concept of end-to-end security and legal responsibility needs to be made compliant with web intermediates which act in place of the original requestors.

The (meta)modelling approach will be presented by Christoph Diefenthal at our workshop on generative computing and MDA on 1 July.

- The German Jobcard architecture - technical and political aspects of its security design

-

Ralf Schmauder sent me this link to a c't article on the planned Jobcard. It is a very good article by Christiane Schulzki-Haddouti on the technical and political aspects of the jobcard proposal. The jobcard is basically a smartcard system that allows access to an employee's data, controlled by the employee herself. The data are all kept in a central location. (That's why access control is such a hot issue: you need it to get the data protection advocates, the "Datenschuetzer", off your back.)

What I'd like to show here is that the whole jobcard architecture consists of two different parts. One part is the central storage service, which technically controls all the employees' data. Data creators like employers send their data to this place. No permission from the employee is needed. But public organizations which want access to those data need an electronically signed statement from the employee; that's what the smartcard is for. This statement, together with the request, is verified by another organization, and access to the data is either granted or denied.

What is really important to note is that data storage and authorized access are two different, technically independent systems. It takes one legal change and the whole authorization system can be discarded - the data in the central storage made available to whichever organization is now entitled to them.

The problem is that the employee's key does not really protect her data. It is used only to give permission for access. If you look at the central role of the storage service, you can see why privacy advocates have every reason to be worried. Again, as in the case of the health card, the difference lies in how the smartcard is used. But without intelligent readers like Finread card readers in the hands of the general public, all smartcard solutions are rather a joke.

But it is worth comparing the existing (paper-based) architecture with the future jobcard system. I am using two diagrams from the article here. The first one shows the current architecture, with the employer maintaining one-to-one relations with the government organizations. Those one-to-one relations could easily be turned into e-services. A standard data format and a secure way of transport is all that is needed. But then every data sink would need a relation to the single data source, or would have to get the data from another sink.

The current situation turned into e-services does not change much but it makes the privacy problems more visible which could cause political problems. A new system is needed that ensures data exchange but also satisfies privacy advocates.

The jobcard architecture as shown in this diagram is a publish-subscribe pattern. Watch how the employer has moved from the center of the system (the data source) to the side. A publish-subscribe pattern decouples consumers and producers from each other. Specifically, it allows new consumers to be added without a need to bother the producers. Clearly a big difference from the one-to-one relations of today.
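The decoupling can be sketched in a few lines of Python. `CentralStorage`, the topic name and the consumer names are hypothetical; the sketch deliberately contains no authorization part at all, which is precisely the part of the jobcard design discussed below:

```python
from collections import defaultdict


class CentralStorage:
    """Hypothetical broker: producers publish, consumers subscribe by topic."""

    def __init__(self):
        self.subscribers = defaultdict(list)
        self.store = defaultdict(list)

    def subscribe(self, topic, callback):
        self.subscribers[topic].append(callback)

    def publish(self, topic, record):
        self.store[topic].append(record)  # data is kept centrally
        for callback in self.subscribers[topic]:
            callback(record)


storage = CentralStorage()
received = []
storage.subscribe("income", lambda rec: received.append(("employment_office", rec)))

# The employer only knows the storage, not the consumers.
storage.publish("income", {"employee": "E-123", "monthly_gross": 3000})

# A new consumer is added later without touching the employer at all.
storage.subscribe("income", lambda rec: received.append(("housing_office", rec)))
```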

This makes data interchange much easier than before and raises the question of who controls the data. The jobcard architecture therefore adds an authorization part to the system. Draw a line below the top three objects (employer, central storage and one consumer): everything below that line belongs to the authorization system.

The important question is now simply: for which purpose is the key on the employee's smartcard used? The current proposal uses it to sign authorizations for data access. These authorizations are checked by the authorization system, and access is granted.

But the key could also be used to encrypt and protect the data. This would really put the employee in control of her data. She could verify a request and, if she grants it, send a version of her data to the receiver. But that would of course substantially reduce the political flexibility with respect to changing the rules for data access.

In one sentence: just cut away everything that is below the central storage service and you will have no technical problem accessing the private data - because the privacy is not in the data.

- New free computer science books - checked IBM Redbooks lately?

-

The last two newsletters from the Redbooks site were chock-full of very interesting books and papers on current IT topics.

Enterprise infrastructures and architectures are quite difficult to grasp if you are new to the business: lots of layers, and many functions reside on different machines. Topics like clustering, single sign-on and identity management are complicated but necessary. The following redbooks cover those topics in detail and are applicable not only in the context of IBM products. Typical examples: How do you configure a web server for SSL mode? How do you secure web infrastructures internally? Where do you store credentials? How do you solve delegation problems? How do you implement RBAC in a web application server context? Can you get your application server to use an LDAP directory? How does your server handle JAAS? If those terms are new to you, get those redbooks.

But before I start with the redbooks I'd like to point you to two papers not from IBM. If you are interested in security, the Security Introduction from BEA is very much worth reading, because it introduces the core terminology and principles behind distributed and application server security in a very readable way.

If you are interested in security services in distributed systems, get the Security Service Specification from OMG. It clarifies concepts like delegation. Not an easy read, but fundamental.

On security and identity management

-

Develop and Deploy a Secure Portal Solution. Very good. Covers concepts like MASS, authentication and authorization infrastructures and SSO.

-

Identity Management Design Guide with IBM Tivoli Identity Manager

-

Enterprise Security Architecture Using IBM Tivoli Security Solutions

-

IBM WebSphere V5.0 Security WebSphere Handbook Series. A classic. Covers almost EVERYTHING important in web application security in the context of application servers. SSO, RBAC, JAAS, X.509 certs etc.

On enterprise infrastructure: LDAP, caching

-

Understanding LDAP - Design and Implementation - Update. No need to buy an LDAP book. This one covers setting up LDAP directories as well as securing them.

-

Architecting High-End WebSphere Environments from Edge Server to EIS. Bring content as far as possible to the edge of your network or to your customers. Dynamically update edge caches from dynacache in your application server. Get information from your EIS systems.

On application server technology: system management, monitoring, clustering

-

Overview of WebSphere Studio Application Monitor and Workload Simulator. Monitoring is always something that gets considered too late in a project. Remember Lenin: trust is good, but control is better.

-

IBM WebSphere Application Server V5.1 System Management and Configuration WebSphere Handbook Series. Distributed system management is essential if you want to run your web applications in a large scale environment.

-

IBM WebSphere V5.1 Performance, Scalability, and High Availability WebSphere Handbook Series. A monster book on clustering, availability and reliability.

-

Enterprise Integration with IBM Connectors. Very good and easy introduction to the J2EE connector architecture - a real core piece of J2EE.

On business integration with web services

-

Service Oriented Architecture and Web Services: Enterprise Service Bus. SOA is the new buzzword on the block.

-

Patterns: Implementing an SOA using an Enterprise Service Bus. The Enterprise Service Bus could well be the future architecture for all EAI systems.

-

- E-voting at the euro and community elections in Germany - Koblenz rulez?

-

Dennis Voelker sent me a link to a Heise newsticker article on e-voting in Germany. According to this report the city of Koblenz used e-voting machines for the European and municipal elections last Sunday. "And it worked flawlessly." At the small price of half a million Euro.

Just a few questions to the head of election central:

-

What do you mean by "worked flawlessly"? Do you mean that the machines did not crash during polling hours? Or do you claim a correct election based on the fact that there were no major delays or queues in front of the booths? According to the report the queues were not longer than in previous years. Is that good? Is that still good enough for 500,000 Euro spent?

-

How do you get proof of a correct election? Do you base your statements on the correctness of software and hardware which you derive in turn from testing and certification principles during manufacturing? Or did you install a system that allows end-to-end verification by voters and which is independent of the voting machines?

-

The head of election central is quoted in this article saying that the major reason for introducing the voting machines is to save time, in other words to get the results earlier: in this case a couple of hours after the polls close. Has waiting some time for the result of a vote been a problem lately? Has somebody been complaining? How is it that, in times like these when public money is scarce, a city spends 500,000 Euro on a system that will be used EVERY FOUR YEARS (OK, let's say every two years) and that will give us a couple of hours less waiting time for the results?

-

I don't want to beat a dead horse here, but I just can't make a case for e-voting. Does it save money? Most helpers during elections in Germany are unpaid. People can easily wait a bit longer for results. Remember: the machines do not promise A BETTER RESULT (;-). If they did, the money would be well spent. All in all this whole issue looks like a solution looking for a problem.
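What "end-to-end verification by voters, independent of the voting machines" from the second question could mean is easiest to see in a toy. The following Python sketch is only that: a hypothetical hash-commitment receipt that the voter checks against a public bulletin board. It assumes such a board exists and covers only the check that a ballot was recorded as cast. Real verifiable voting schemes additionally need encrypted ballots, a publicly verifiable tally and protection against vote buying; a receipt that reveals the choice, like this one, would allow coercion.

```python
import hashlib
import secrets


def commit(choice: str, nonce: bytes) -> str:
    """Hash commitment: hides the choice from anyone who lacks the nonce."""
    return hashlib.sha256(nonce + choice.encode("utf-8")).hexdigest()


class BulletinBoard:
    """Hypothetical public, append-only list of ballot commitments."""

    def __init__(self) -> None:
        self.entries: list[str] = []

    def publish(self, commitment: str) -> None:
        self.entries.append(commitment)


def cast_vote(board: BulletinBoard, choice: str) -> dict:
    """The voting machine records a commitment and hands the voter a receipt."""
    nonce = secrets.token_bytes(16)
    commitment = commit(choice, nonce)
    board.publish(commitment)
    return {"choice": choice, "nonce": nonce, "commitment": commitment}


def voter_checks(board: BulletinBoard, receipt: dict) -> bool:
    """Runs on the voter's own device, independent of the voting machine."""
    recomputed = commit(receipt["choice"], receipt["nonce"])
    return recomputed == receipt["commitment"] and recomputed in board.entries
```

If a machine silently drops or alters a ballot, the voter's recomputed commitment either does not match the receipt or does not appear on the board. The trust then rests on the public board and the voter's own check, not on the certification of the machine.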

Note

Could someone familiar with e-voting perhaps tell me the rationale behind the push for e-voting? And perhaps answer the questions above as well?

-

- Beyond Fear Tour 2004

-

On 11-12 May 2004 I toured the south-west corner of Germany and Switzerland with students of the HDM.


This was part of our yearly week reserved for field trips. Our field trip had the topic security/safety, and we did it on our motorbikes. Originally we had planned to take part in a bike safety training one afternoon, but this did not work out: our group was not big enough. Perhaps next year. Our service team (;-) did a great job supporting us bikers; only every once in a while did they get lost and end up on a smaller mountain (;-)


The pictures were taken by Marco Zugelder and Dietmar Tochtermann, and you can find more on Marco's homepage. How inventive computer science students can be if needed: Dietmar's custom-made read light...

It was a wonderful trip and next year we will take at least 3 days for our excursion.

- On Motorbikes and Security, more?

-

What do motorbike safety and IT security have in common? The answer is simply the attitude towards risk. In both areas you can observe the full range of behavior towards risk: from denying it (nothing's gonna happen) to strict risk avoidance (you must be nuts to ride a bike), from practising frequently to riding only rarely. And there are tons of myths about security: "If you ride only a bit on Sundays, you are very unlikely to get killed - the risk increases with the miles you ride." In IT terms this is equivalent to statements like "Linux is safer than xx". First: the so-called Sunday rider is not at all safer from being killed, because if he gets into a critical situation he very likely has no practised response to it. And blunt statements about the security of operating systems have too many hidden caveats to be of real value: Linux may be safer than xx IFF you know it well AND keep it up to date AND have good system management practices etc.

Fear is an important element of riding a bike. When it becomes too strong, fear turns into panic and can easily kill you. In fact, many serious motorbike accidents are the result of panic reactions. A very common and deadly one is the inability to increase the lean angle in curves beyond practised limits in an emergency. Riders seemingly glued to their bikes then tend to crash straight into oncoming traffic. So for a biker it is a necessary survival skill to go "beyond fear". If you want to learn more about this, read Die obere Haelfte des Motorrads ("The Upper Half of the Motorbike") by Bernd Spiegel, a behavioral scientist and seasoned bike trainer.

Is that all there is about bikes and security? No. Motorbikes have one quality that makes them very special: a notion of freedom which shows e.g. in wildly different bikes - many of them modified by their owners - and biker habits.


Bikers insist on their freedom to ride and live in a certain way, even if this sometimes puts them at risk. They tolerate a certain amount of safety equipment like helmets, but they would reject full body armor because it would kill the fun of riding a bike. And that's where the whole thing becomes political as well: for years government and industry have tried to establish new rules and standards, e.g. euro-helmets, standards for biker clothing etc. Some of it was quite useful, some only served the interests of the industry. And some rules, like closing certain roads to bikers, try to influence the trade-offs between safety, risk, fun, common interests etc. and get dangerously close to taking away the freedom to move. But it shows clearly that safety or security is a TRADE-OFF between different values.

The idea to offer an IT-security-related tour on motorbikes for students and colleagues is about a year old. It got its name when Bruce Schneier's latest book Beyond Fear was published. In this book Schneier reminds us that security is a TRADE-OFF between risk and gain. It does not mean complete risk avoidance. He also shows that freedom (individually and on the scale of a society) is tied to taking risks.

As with bikes, fear is a common concept in security: why would one think about security if not for fear of something? And as with bikes, fear can be counterproductive. Fear is a basic instinct, and neither bikes nor security questions (e.g. the risks of terror attacks) are well suited to reactions based on basic instincts.

Schneier tries to put security back into perspective by asking five crucial questions about each proposed solution:

What are the assets you want to protect? What are the risks to those assets? How well does the security solution mitigate those risks? What other risks does the security solution cause? What costs and trade-offs does the security solution impose? (Taken from Beyond Fear, page 14.)

Schneier puts security back into its social context and makes its political and social side visible. He demonstrates his approach using many security measures that the US government put in place after 9/11 but which turn out to be mostly useless or even damaging to individuals or society. (Only yesterday the FBI announced, for the Xth time, vague warnings of terrorist threats for the summer: nothing specific (sic). For some unknown reason the color-based threat levels currently used in the USA have not been adjusted to this. Perhaps somebody simply could not figure out what "orange" would really mean compared to "green" or "red", and that the risks are always there but life goes on?) "Nothing specific" - yes, just enough to scare some percentage of the population into ridiculous safety measures (duct tape etc.).

But I'd like to ask those questions on a much smaller scale as well, e.g. within companies. What is the right kind of IT security for a campus? For a public broadcasting station (see below)? What are security processes really worth, and what do they really cost? OK, so sharing a user ID and password between colleagues is a risk because it involves trust, e.g. when somebody going on vacation gives her credentials to a colleague so that work can go on uninterrupted. Yes, there is a risk that this trust can be abused. But how often is this really the case? And what does this obvious sign of trust mean for teams and their informal relations, motivation etc.? It is true: security problems start with trust. But what kind of problems do you experience without trust?

And Schneier's questions even work on the very small, individual level, e.g. with motorbikes. Is the only proper response to a risk assessment for motorbikes really to stop riding? Or are the trade-offs of this security measure simply too high? This leads back to the important statement that security is not about risk avoidance. It is about risk management. Here is a place to learn more about bike safety: Stehlin bike training. There is a lot you can do to improve safety and security on a bike (I do not really distinguish between these terms here for two reasons: a) we don't have two different words for this in German, it's all "Sicherheit", and b) a lot of things happening on a bike are intentional and therefore belong to the security category, even if they are your own intentions (;-)).